Wrappers in Clojure Ring

Notes

In this post I'll give updates about open source I worked on during January and February 2026.

To see previous OSS updates, go here.

I'd like to thank all the sponsors and contributors that make this work possible. Without you, the below projects would not be as mature or wouldn't exist or be maintained at all! So a sincere thank you to everyone who contributes to the sustainability of these projects.

Current top tier sponsors:

Open the details section for more info about sponsoring.

If you want to ensure that the projects I work on are sustainably maintained, you can sponsor this work in the following ways. Thank you!

Babashka Conf 2026 is happening on May 8th in the OBA Oosterdok library in Amsterdam! David Nolen, primary maintainer of ClojureScript, will be our keynote speaker! We're excited to have Nubank, Exoscale, Bob and Itonomi as sponsors. Wendy Randolph will be our event host / MC / speaker liaison :-). The CfP is now closed. More information here. Get your ticket via Meetup.com (there is a waiting list, but more places may become available). The day after babashka conf, Dutch Clojure Days 2026 will be happening, so you can enjoy a whole weekend of Clojure in Amsterdam. Hope to see many of you there!

I spent a lot of time making SCI's deftype, case, and macroexpand-1 match JVM Clojure more closely. As a result, libraries like riddley, cloverage, specter, editscript, and compliment now work in babashka.

After seeing charm.clj, a terminal UI library, I decided to incorporate JLine3 into babashka so people can build terminal UIs. Since I had JLine anyway, I also gave babashka's console REPL a major upgrade with multi-line editing, tab completion, ghost text, and persistent history. A next goal is to run rebel-readline + nREPL from source in babashka, but that's still work in progress (e.g. the compliment PR is still pending).

I've been working on async/await support for ClojureScript (CLJS-3470), inspired by how squint handles it. I also implemented it in SCI (scittle, nbb etc. use SCI as a library), though the approach there is different since SCI is an interpreter.

Last but not least, I started cream, an experimental native binary that runs full JVM Clojure with fast startup using GraalVM's Crema. Unlike babashka, it supports runtime bytecode generation (definterface, deftype, gen-class). It currently depends on a fork of Clojure and GraalVM EA, so it's not production-ready yet.

Here are updates about the projects/libraries I've worked on in the last two months in detail.

NEW: cream: Clojure + GraalVM Crema native binary

- eval, require, and library loading
- definterface, deftype, gen-class, and other constructs that generate JVM bytecode at runtime
- .java source files directly, as a fast alternative to JBang

babashka: native, fast starting Clojure interpreter for scripting.

- bb repl improvements: multi-line editing, tab completion, ghost text, eldoc, doc-at-point (C-x C-d), persistent history
- deftype with map interfaces (e.g. IPersistentMap, ILookup, Associative). Libraries like core.cache and linked now work in babashka.
- babashka.terminal namespace that exposes tty?
- deftype supports Object + hashCode
- reify with java.time.temporal.TemporalQuery
- reify with methods returning int/short/byte/float

SCI: Configurable Clojure/Script interpreter suitable for scripting

- deftype now macroexpands to deftype*, matching JVM Clojure, enabling code walkers like riddley
- case now macroexpands to JVM-compatible case* format, enabling tools like riddley and cloverage
- async/await in ClojureScript. See docs.
- defrecord now expands to deftype* (like Clojure), with factory fns emitted directly in the macro expansion
- macroexpand-1 now accepts an optional env map as first argument
- proxy-super, proxy-call-with-super, update-proxy and proxy-mappings
- this-as in ClojureScript

clj-kondo: static analyzer and linter for Clojure code that sparks joy.

@jramosg, @tomdl89 and @hugod have been on fire with contributions this period. Six new linters!

- :duplicate-refer which warns on duplicate entries in :refer of :require (@jramosg)
- :aliased-referred-var, which warns when a var is both referred and accessed via an alias in the same namespace (@jramosg)
- :is-message-not-string which warns when clojure.test/is receives a non-string message argument (@jramosg)
- :redundant-format to warn when format strings contain no format specifiers (@jramosg)
- :redundant-primitive-coercion to warn when primitive coercion functions are applied to expressions already of that type (@hugod)
- array, class, inst and type checking support for related functions (@jramosg)
- clojure.test functions and macros (@jramosg)
- :condition-always-true linter to check first argument of clojure.test/is (@jramosg)
- :redundant-declare which warns when declare is used after a var is already defined (@jramosg)
- pmap and future-related functions (@jramosg)

squint: CLJS syntax to JS compiler

@tonsky and @willcohen contributed several improvements this period.

- squint.math, also available as clojure.math namespace
- compare-and-swap!, swap-vals! and reset-vals! (@tonsky)
- dotimes with _ binding (@tonsky)
- shuffle not working on lazy sequences (@tonsky)
- :require-macros with :refer now accumulate instead of overwriting (@willcohen)
- -0.0)
- prn js/undefined as nil
- yield* IIFE

scittle: Execute Clojure(Script) directly from browser script tags via SCI

- async/await. See docs.
- js/import not using eval
- this-as
- #<Promise value> when a promise is evaluated

nbb: Scripting in Clojure on Node.js using SCI

- (js/Promise.resolve 1) ;;=> #<Promise 1>

fs - File system utility library for Clojure

clerk: Moldable Live Programming for Clojure

neil: A CLI to add common aliases and features to deps.edn-based projects.

- neil test now exits with non-zero exit code when tests fail

cherry: Experimental ClojureScript to ES6 module compiler

- :require-macros clauses with :refer now properly accumulate instead of overwriting each other

Contributions to third party projects:

- cli/cli to cli/parse-opts, bumped riddley

These are (some of the) other projects I'm involved with but little to no activity happened in the past month.

So I came across this (quite old) post on Stack Overflow: someone wanted to print a list next to a string and was struggling with it.

I would like to print a list along with a string identifier like

list = [1, 2, 3]

IO.puts "list is ", list

This does not work. I have tried few variations like

# this prints only the list, not any strings

IO.inspect list

# using puts which also does

Elixir surely has a function that converts a list to a string, and it does: List.to_string

But to my surprise List.to_string was doing weird things

iex(42)> [232, 137, 178] |> List.to_string

<<195, 168, 194, 137, 194, 178>>

iex(43)> [1, 2, 3] |> List.to_string

<<1, 2, 3>>

That was not what I was expecting. I was expecting a return value similar to what IO.inspect produces.

So to verify my expectation, I checked what Clojure does, because Clojure to me is the epitome of sanity

Clojure 1.12.4

user=> (str '(232 137 178))

"(232 137 178)"

user=> (str '(1 2 3))

"(1 2 3)"

It was more or less what IO.inspect does: it returns something that looks like how lists "look like" as code.

So next step was more introspection

iex(47)> [232, 137, 178] |> List.to_string |> i

Term

<<195, 168, 194, 137, 194, 178>>

Data type

BitString

Byte size

6

Description

This is a string: a UTF-8 encoded binary. It's printed with the `<<>>`

syntax (as opposed to double quotes) because it contains non-printable

UTF-8 encoded code points (the first non-printable code point being

`<<194, 137>>`).

Reference modules

String, :binary

Implemented protocols

Collectable, IEx.Info, Inspect, JSON.Encoder, List.Chars, String.Chars

What the heck is BitString?

So I kept fiddling with the function, until I finally got it

iex(44)> ["o","m",["z","y"]] |> List.to_string

"omzy"

iex(45)> ["o","m",["z","y"]] |> IO.inspect

["o", "m", ["z", "y"]]

["o", "m", ["z", "y"]]

List.to_string does not transform a list to a string while preserving its structure. It flattens the list, treats each integer as a Unicode code point (strings are kept as they are), and returns the result as a UTF-8 encoded string. If you print that, you get whatever text those code points produce.

iex(49)> [232, 137, 178] |> List.to_string |> IO.puts

è²

:ok

iex(50)> [91, 50, 51, 50, 44, 32, 49, 51, 55, 44, 32, 49, 55, 56, 93] |> List.to_string |> IO.puts

[232, 137, 178]

:ok
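For comparison, roughly the same behaviour can be sketched in Clojure on the JVM. This is only an illustration of the idea, not how Elixir implements it: `code-points->string` is a made-up helper name, and it assumes every integer in the input is a valid Unicode code point.

```clojure
(defn code-points->string
  "Flattens a nested collection and concatenates its elements.
  Integers are treated as Unicode code points; anything else goes through `str`.
  Hypothetical helper, JVM Clojure only."
  [xs]
  (->> (flatten xs)
       (map (fn [x]
              (if (integer? x)
                (String. ^chars (Character/toChars (int x)))
                (str x))))
       (apply str)))

;; Code points for the characters "[232, 137, 178]"
(code-points->string [91 50 51 50 44 32 49 51 55 44 32 49 55 56 93])
;; => "[232, 137, 178]"

(code-points->string ["o" "m" ["z" "y"]])
;; => "omzy"
```

The structure-preserving counterpart, closer to what inspect does in Elixir, is `pr-str`, which returns the printed form of the data.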

In retrospect this is what the docs say

but well, the docs weren't telling me what I wanted them to say 😀

And oh, before I forget, Elixir has the inspect function, which does exactly what I thought List.to_string would do

iex(53)> [232, 137, 178] |> inspect |> IO.puts

[232, 137, 178]

:ok

iex(54)> ["o","m",["z","y"]] |> inspect |> IO.puts

["o", "m", ["z", "y"]]

:ok

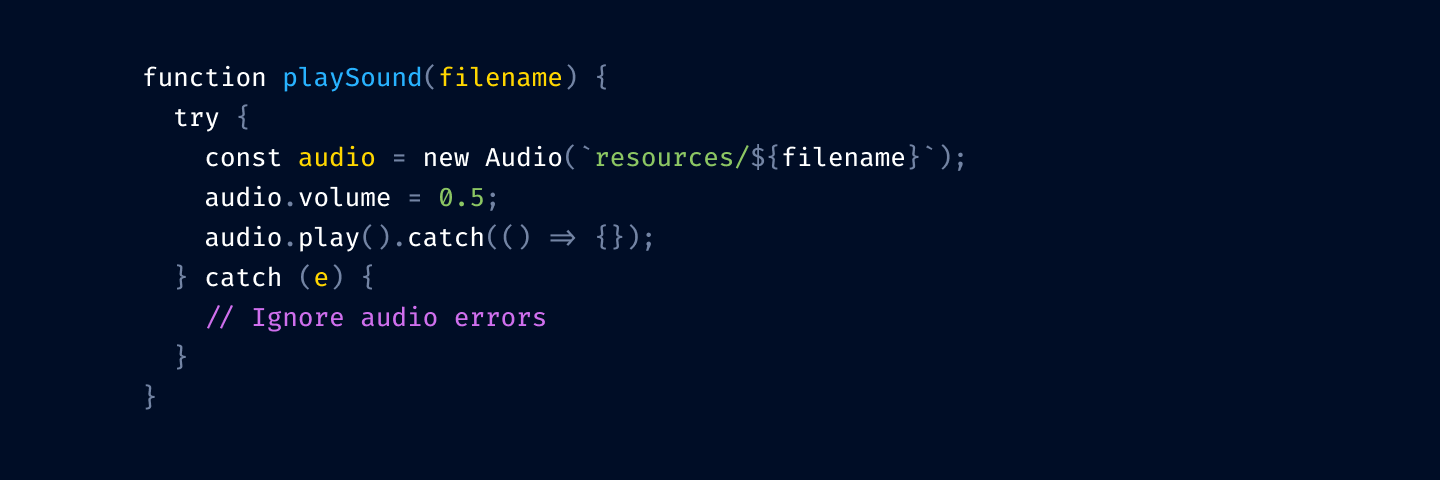

Syntax highlighting is a tool. It can help you read code faster. Find things quicker. Orient yourself in a large file.

Like any tool, it can be used correctly or incorrectly. Let’s see how to use syntax highlighting to help you work.

Most color themes have a unique bright color for literally everything: one for variables, another for language keywords, constants, punctuation, functions, classes, calls, comments, etc.

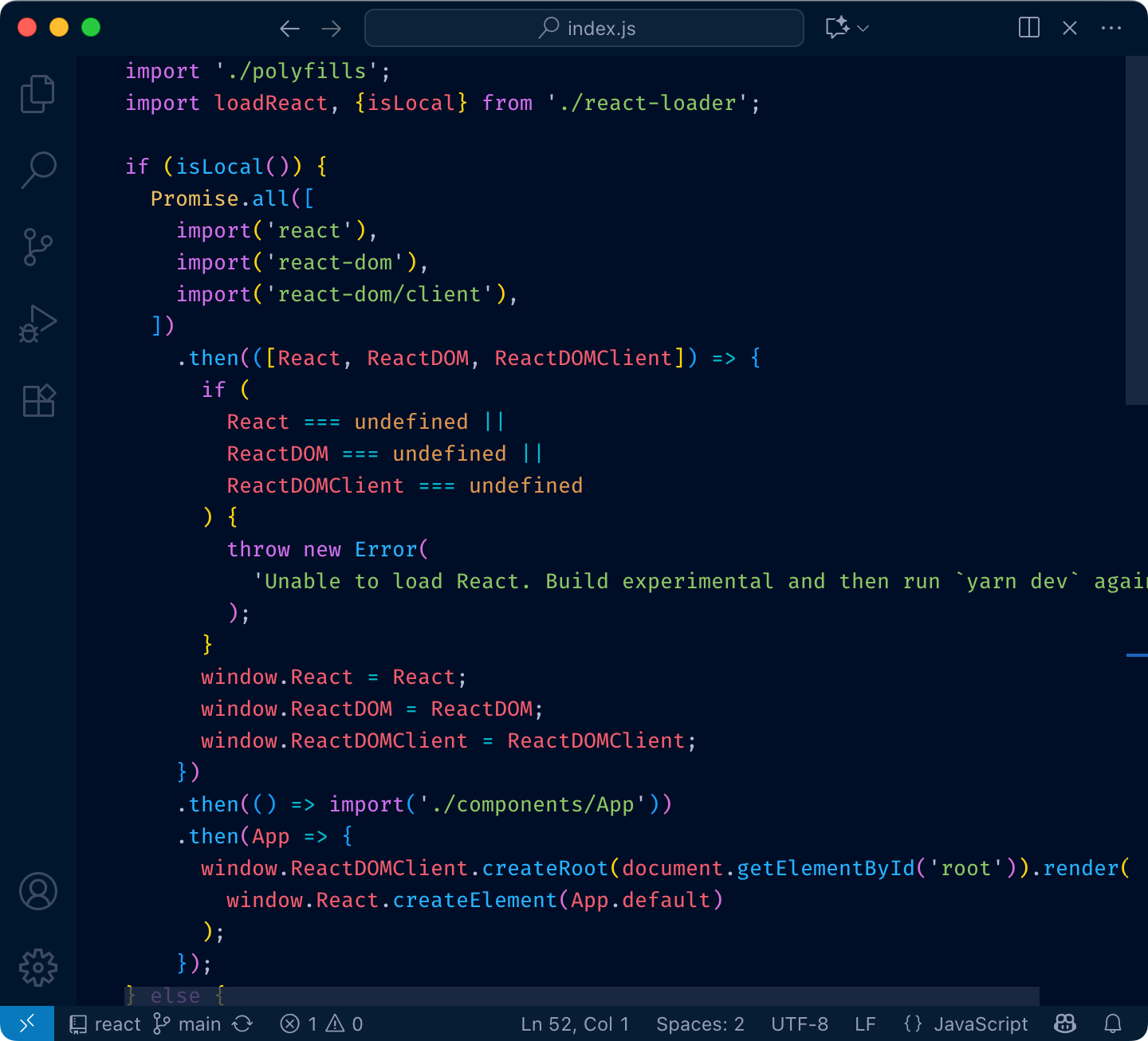

Sometimes it gets so bad one can’t see the base text color: everything is highlighted. What’s the base text color here?

The problem with that is, if everything is highlighted, nothing stands out. Your eye adapts and considers it a new norm: everything is bright and shiny, and instead of getting separated, it all blends together.

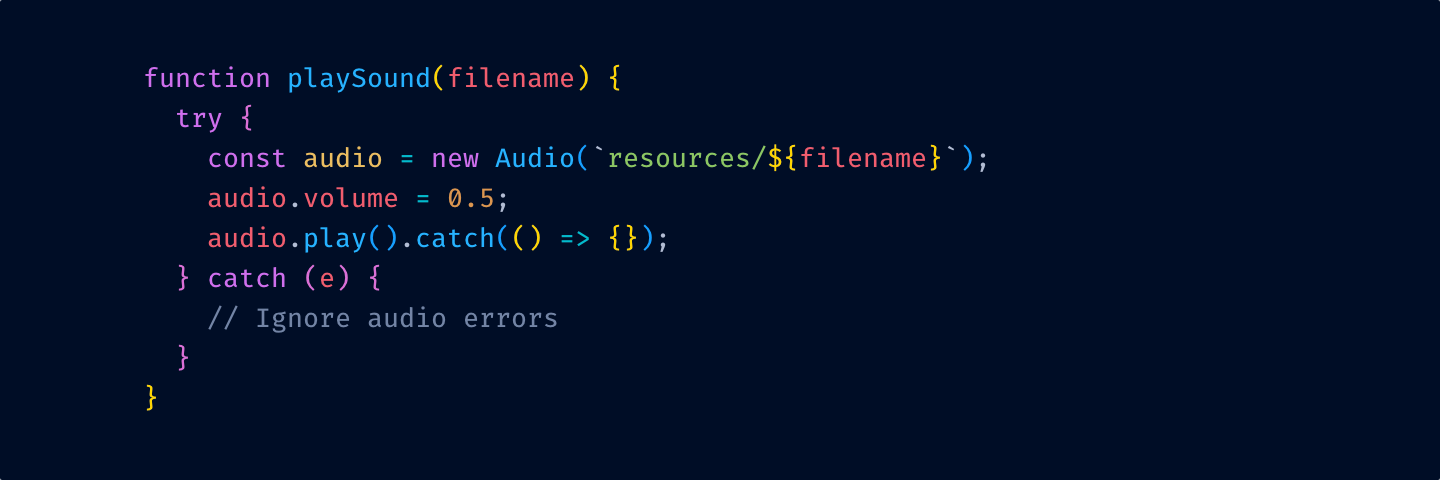

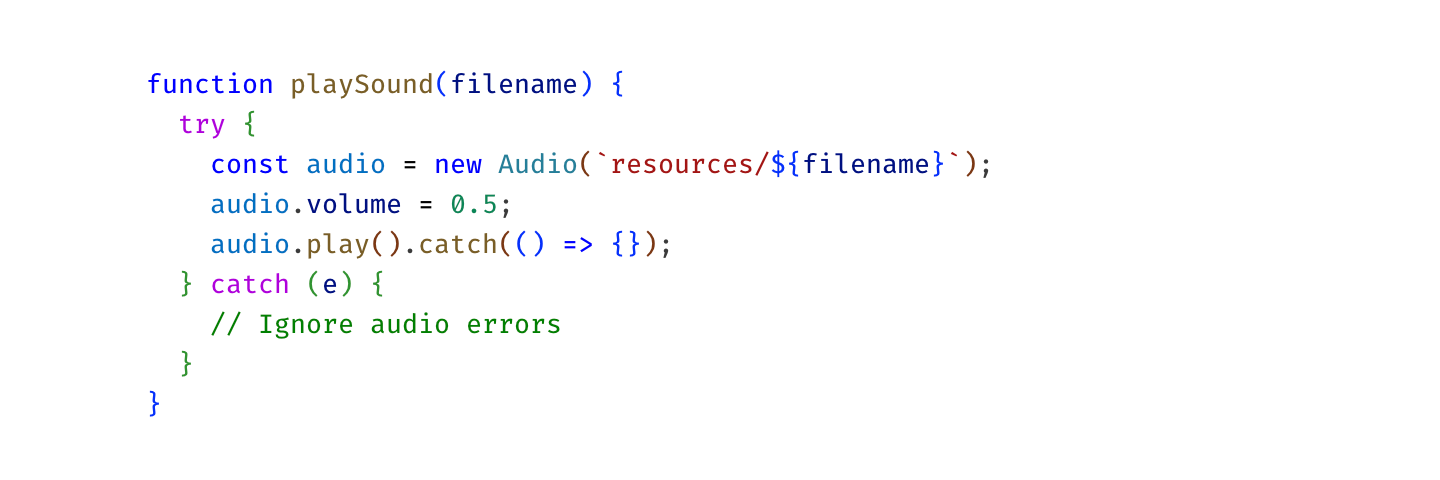

Here’s a quick test. Try to find the function definition here:

and here:

See what I mean?

So yeah, unfortunately, you can’t just highlight everything. You have to make decisions: what is more important, what is less. What should stand out, what shouldn’t.

Highlighting everything is like assigning “top priority” to every task in Linear. It only works if most of the tasks have lesser priorities.

If everything is highlighted, nothing is highlighted.

There are two main use-cases you want your color theme to address:

1 is a direct index lookup: color → type of thing.

2 is a reverse lookup: type of thing → color.

Truth is, most people don’t do these lookups at all. They might think they do, but in reality, they don’t.

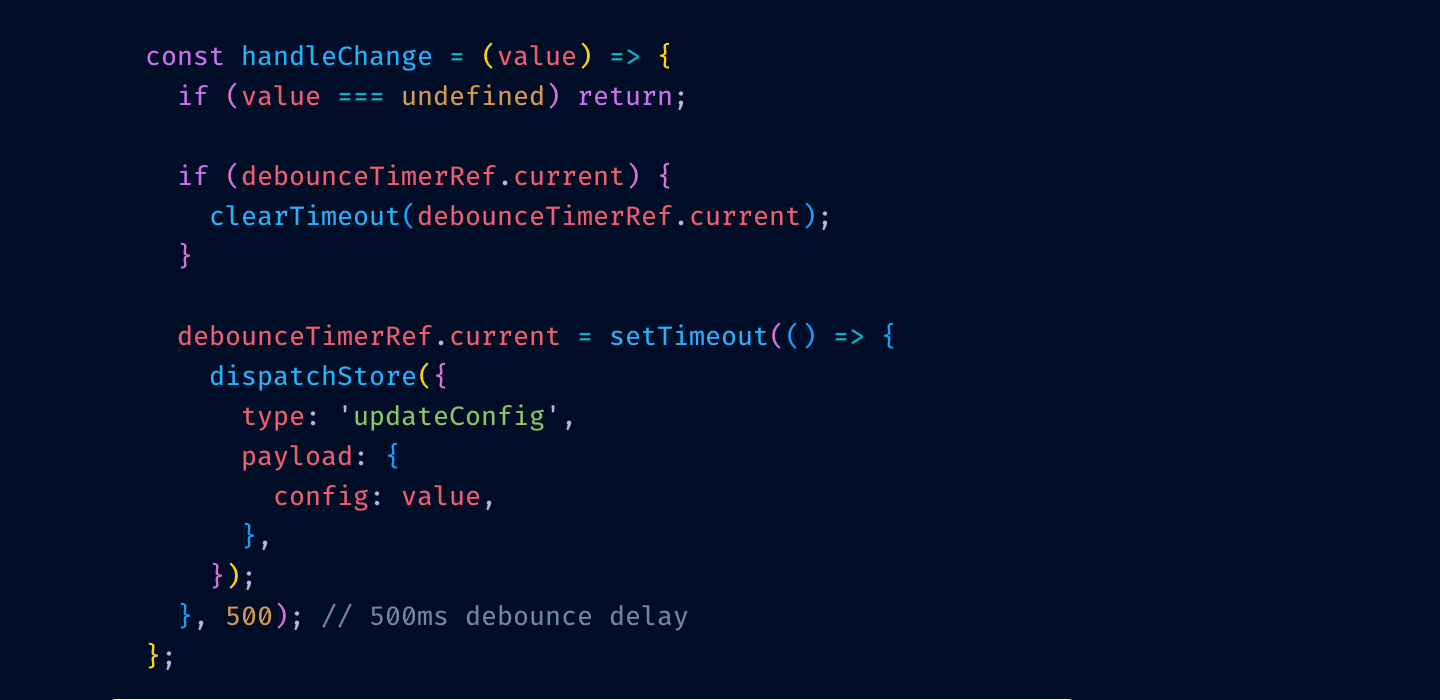

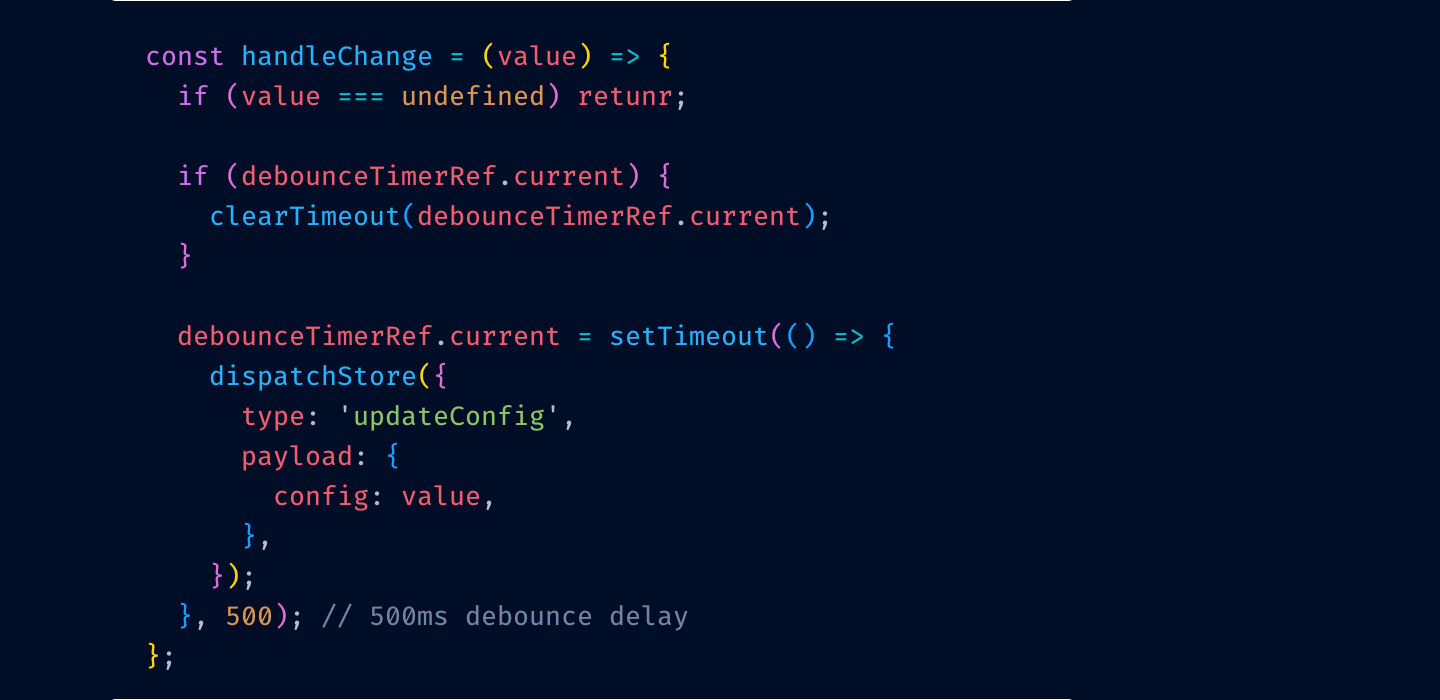

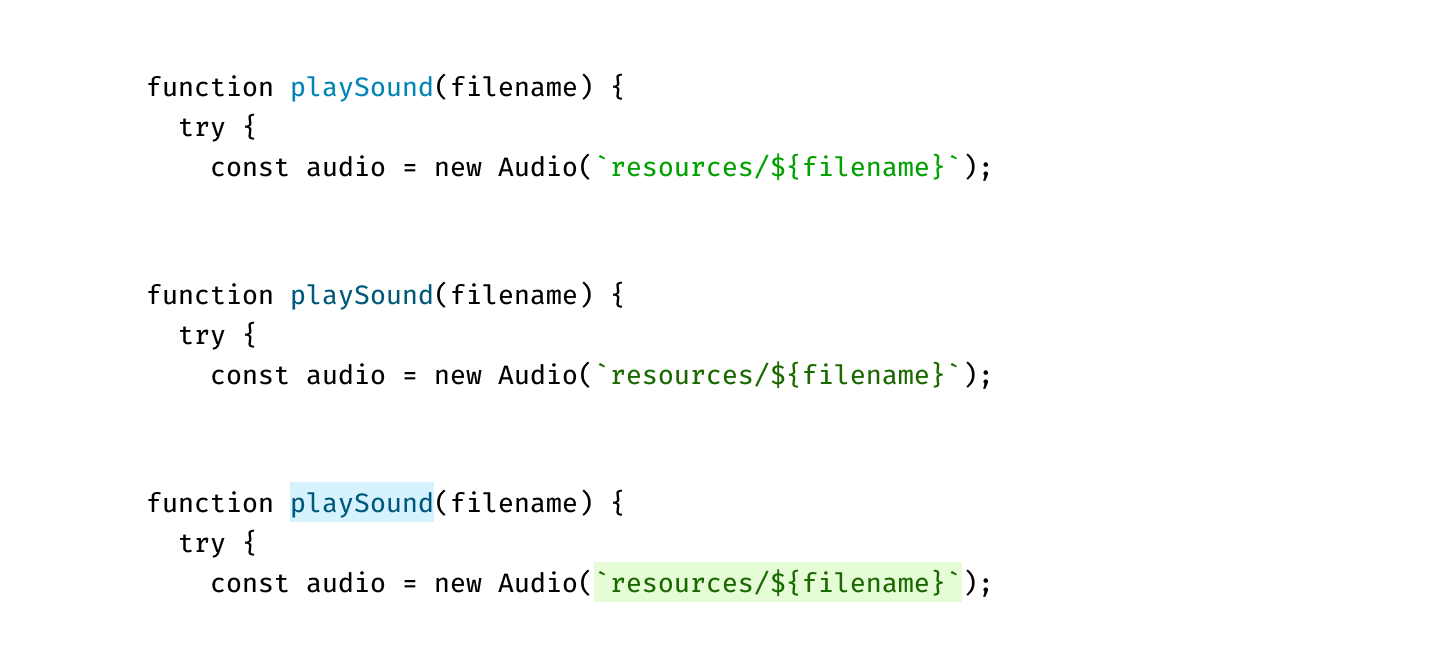

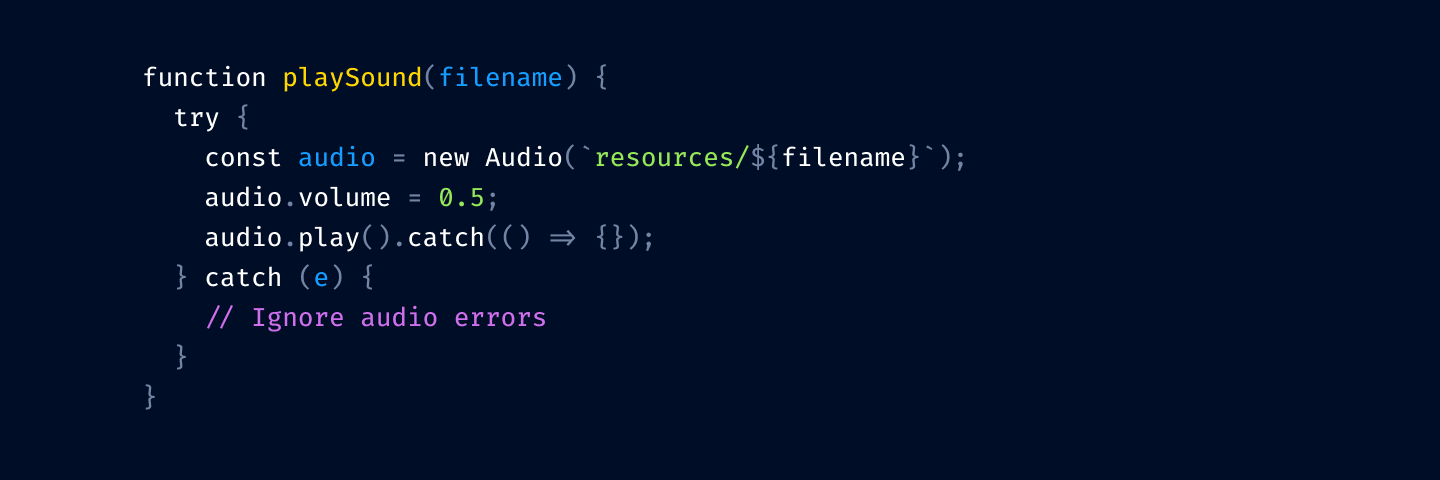

Let me illustrate. Before:

After:

Can you see it? I misspelled return as retunr and its color switched from red to purple.

I can’t.

Here’s another test. Close your eyes (not yet! Finish this sentence first) and try to remember what color your color theme uses for class names?

Can you?

If the answer for both questions is “no”, then your color theme is not functional. It might give you comfort (as in—I feel safe. If it’s highlighted, it’s probably code) but you can’t use it as a tool. It doesn’t help you.

What’s the solution? Have an absolute minimum of colors. So little that they all fit in your head at once. For example, my color theme, Alabaster, only uses four:

That’s it! And I was able to type it all from memory, too. This minimalism allows me to actually do lookups: if I’m looking for a string, I know it will be green. If I’m looking at something yellow, I know it’s a comment.

Limit the number of different colors to what you can remember.

If you swap green and purple in my editor, it’ll be a catastrophe. If somebody swapped colors in yours, would you even notice?

Something there isn’t a lot of. Remember—we want highlights to stand out. That’s why I don’t highlight variables or function calls—they are everywhere, your code is probably 75% variable names and function calls.

I do highlight constants (numbers, strings). These are usually used more sparingly and often are reference points—a lot of logic paths start from constants.

Top-level definitions are another good idea. They give you an idea of a structure quickly.

Punctuation: it helps to separate names from syntax a little bit, and you care about names first, especially when quickly scanning code.

Please, please don’t highlight language keywords. class, function, if, else: stuff like this. You rarely look for them: “where’s that if” is a valid question, but you will be looking not at the if keyword, but at the condition after it. The condition is the important, distinguishing part. The keyword is not.

Highlight names and constants. Grey out punctuation. Don’t highlight language keywords.

The tradition of using grey for comments comes from the times when people were paid by line. If you have something like

of course you would want to grey it out! This is bullshit text that doesn’t add anything and was written to be ignored.

But for good comments, the situation is opposite. Good comments ADD to the code. They explain something that couldn’t be expressed directly. They are important.

So here’s another controversial idea:

Comments should be highlighted, not hidden away.

Use bold colors, draw attention to them. Don’t shy away. If somebody took the time to tell you something, then you want to read it.

Another secret nobody is talking about is that there are two types of comments:

Most languages don’t distinguish between those, so there’s not much you can do syntax-wise. Sometimes there’s a convention (e.g. -- vs /* */ in SQL), then use it!

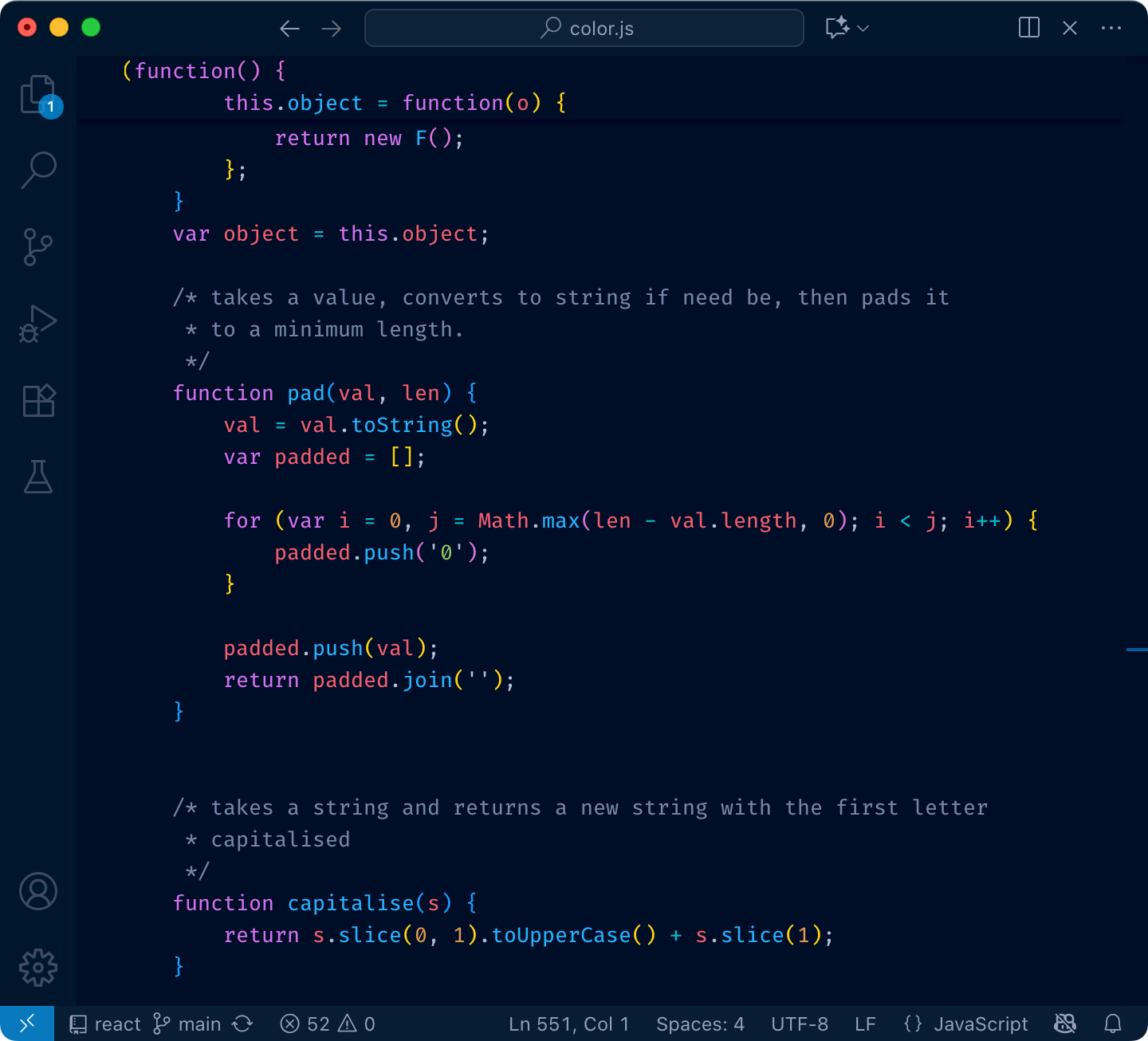

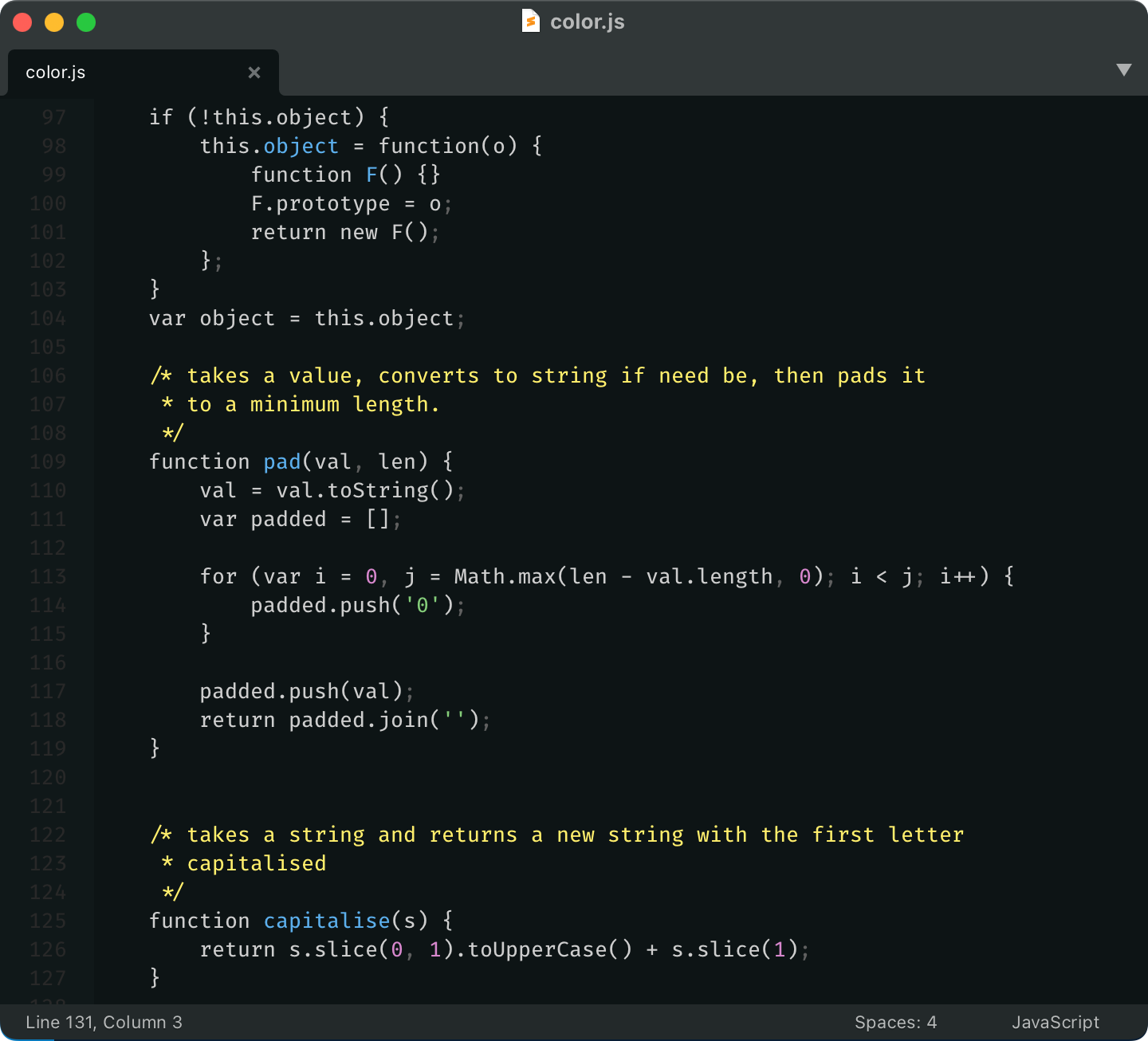

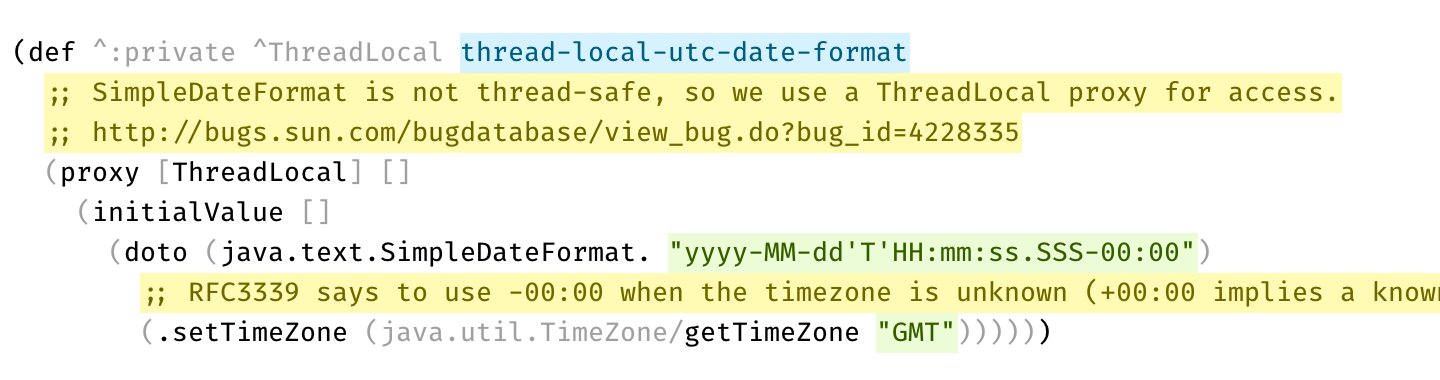

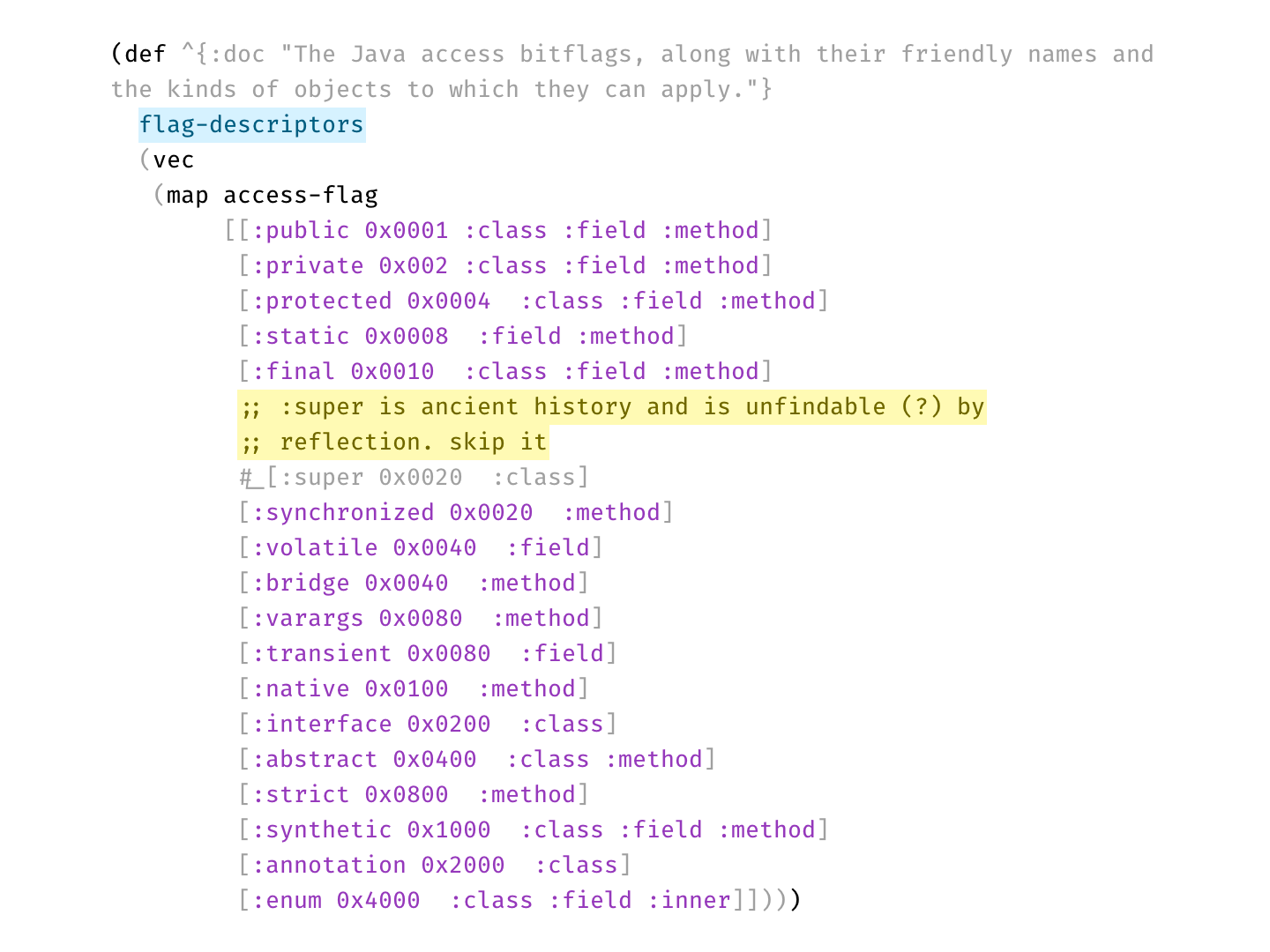

Here’s a real example from Clojure codebase that makes perfect use of two types of comments:

Disabled code is gray, explanation is bright yellow

Per statistics, 70% of developers prefer dark themes. Being in the other 30%, that question always puzzled me. Why?

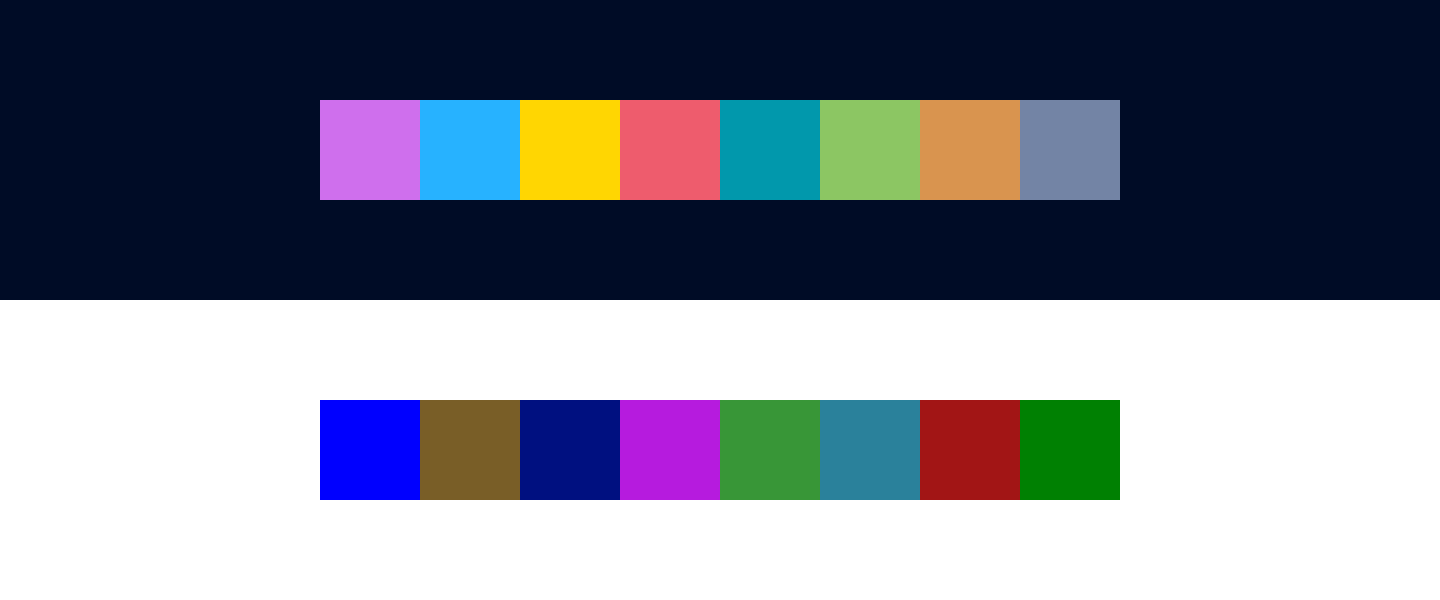

And I think I have an answer. Here’s a typical dark theme:

and here’s a light one:

On the latter one, colors are way less vibrant. Here, I picked them out for you:

Notice how many colors there are. No one can remember that many.

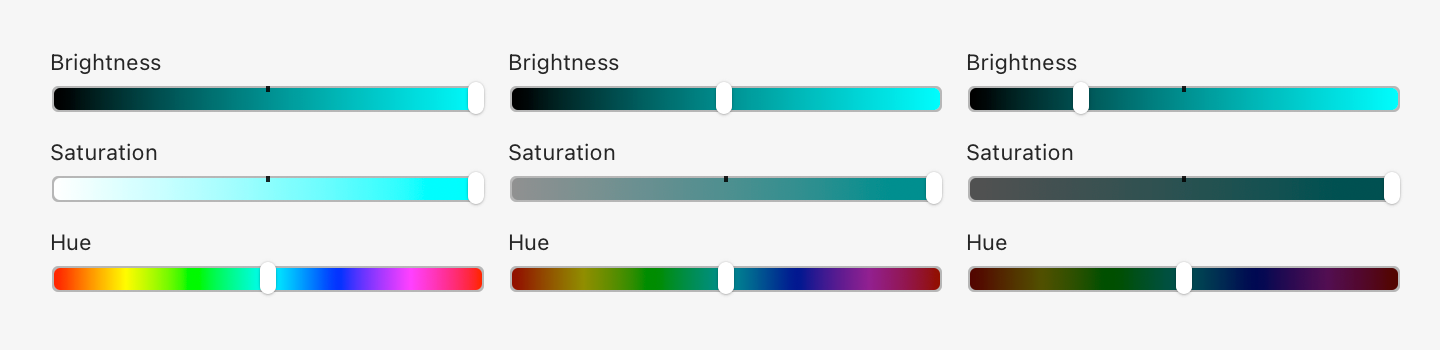

This is because dark colors are in general less distinguishable and more muddy. Look at Hue scale as we move brightness down:

Basically, in the dark part of the spectrum, you just get fewer colors to play with. There’s no “dark yellow” or good-looking “dark teal”.

Nothing can be done here. There are no magic colors hiding somewhere that have both good contrast on a white background and look good at the same time. By choosing a light theme, you are dooming yourself to a very limited, bad-looking, barely distinguishable set of dark colors.

So it makes sense. Dark themes do look better. Or rather: light ones can’t look good. Science ¯\_(ツ)_/¯

But!

But.

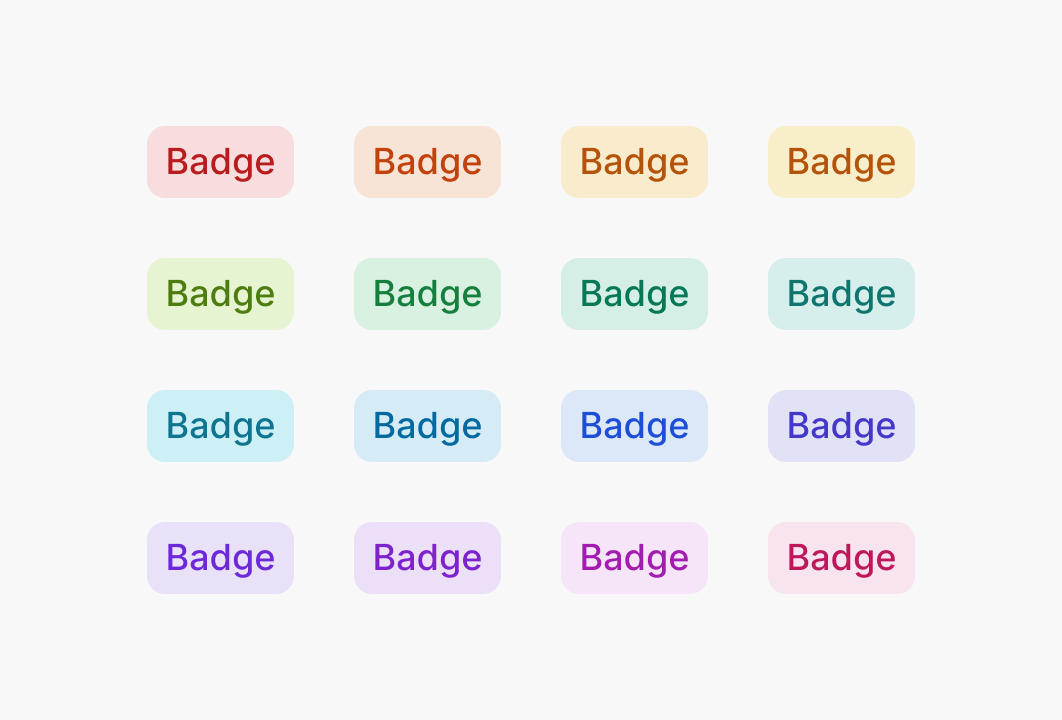

There is one trick you can do, that I don’t see a lot of. Use background colors! Compare:

The first one has nice colors, but the contrast is too low: letters become hard to read.

The second one has good contrast, but you can barely see colors.

The last one has both: high contrast and clean, vibrant colors. Lighter colors are readable even on a white background since they fill a lot more area. Text is the same brightness as in the second example, yet it gives the impression of clearer color. It’s all upside, really.

UI designers have known about this trick for a while, but I rarely see it applied in code editors:

If your editor supports choosing background color, give it a try. It might open light themes for you.

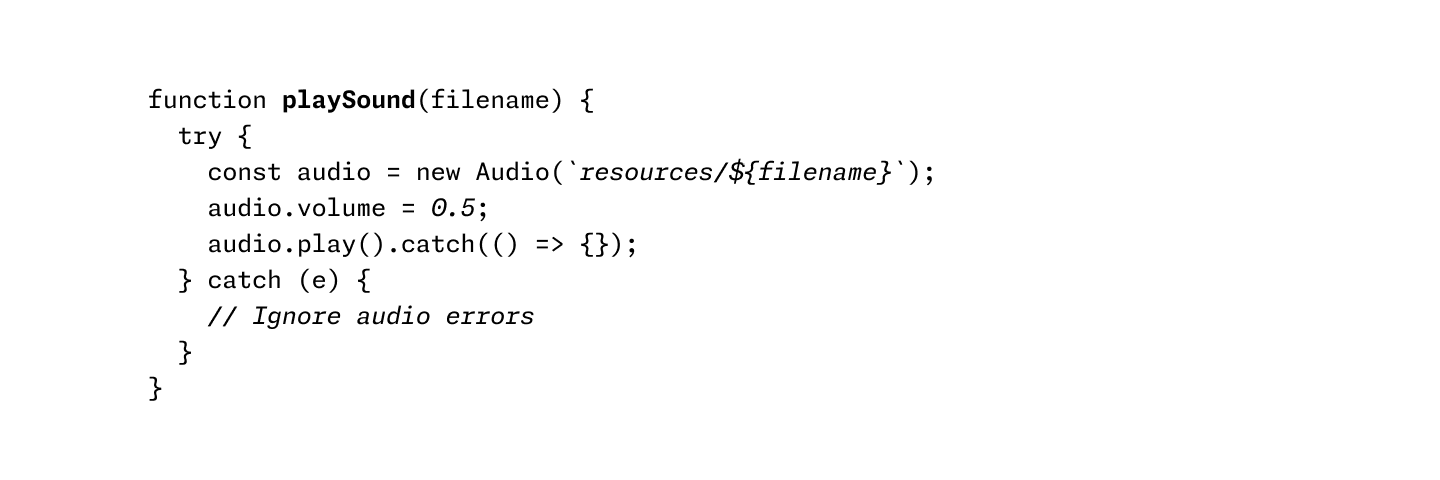

Don’t use. This goes into the same category as too many colors. It’s just another way to highlight something, and you don’t need too many, because you can’t highlight everything.

In theory, you might try to replace colors with typography. Would that work? I don’t know. I haven’t seen any examples.

Using italics and bold instead of colors

Some themes pay too much attention to being scientifically uniform. Like, all colors have the same exact lightness, and hues are distributed evenly on a circle.

This could be nice (to know if you have OCD), but in practice, it doesn’t work as well as it sounds:

OkLab l=0.7473 c=0.1253 h=0, 45, 90, 135, 180, 225, 270, 315

The idea of highlighting is to make things stand out. If you make all colors the same lightness and chroma, they will look very similar to each other, and it’ll be hard to tell them apart.

Our eyes are way more sensitive to differences in lightness than in color, and we should use it, not try to negate it.

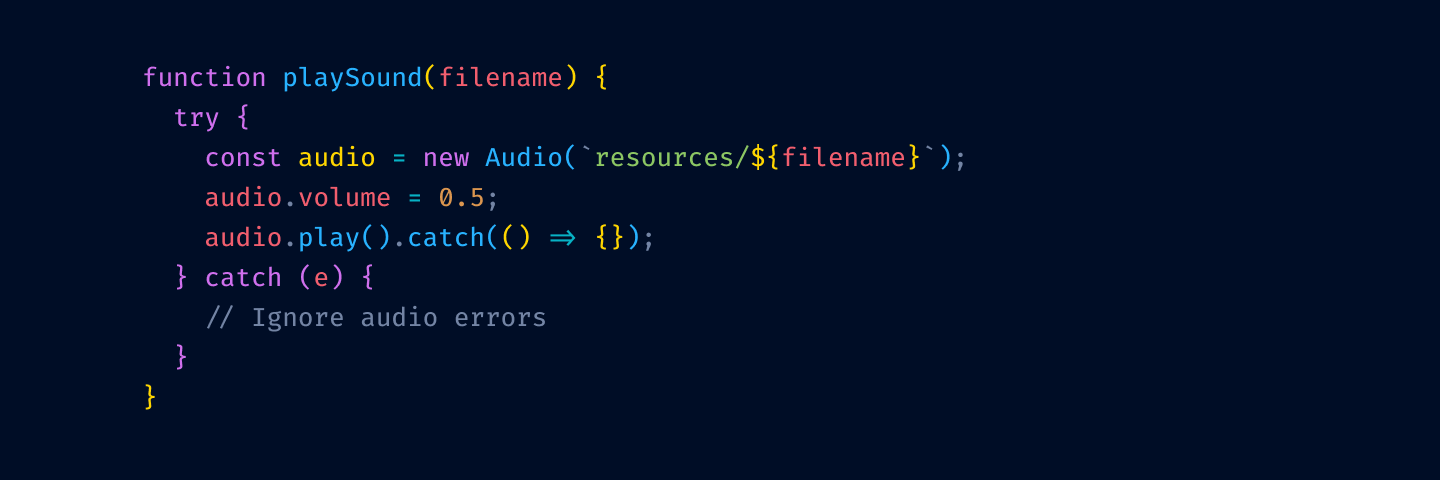

Let’s apply these principles step by step and see where it leads us. We start with the theme from the start of this post:

First, let’s remove highlighting from language keywords and re-introduce base text color:

Next, we remove color from variable usage:

and from function/method invocation:

The thinking is that your code is mostly references to variables and method invocation. If we highlight those, we’ll have to highlight more than 75% of your code.

Notice that we’ve kept variable declarations. These are not as ubiquitous and help you quickly answer a common question: where does this thing come from?

Next, let’s tone down punctuation:

I prefer to dim it a little bit because it helps names stand out more. Names alone can give you the general idea of what’s going on, and the exact configuration of brackets is rarely equally important.

But you might roll with base color punctuation, too:

Okay, getting close. Let’s highlight comments:

We don’t use red here because you usually need it for squiggly lines and errors.

This is still one color too many, so I unify numbers and strings to both use green:

Finally, let’s rotate colors a bit. We want to respect nesting logic, so function declarations should be brighter (yellow) than variable declarations (blue).

Compare with what we started:

In my opinion, we got a much more workable color theme: it’s easier on the eyes and helps you find stuff faster.

I’ve been applying these principles for about 8 years now.

I call this theme Alabaster and I’ve built it a couple of times for the editors I used:

It’s also been ported to many other editors and terminals; the most complete list is probably here. If your editor is not on the list, try searching for it by name—it might be built-in already! I always wondered where these color themes come from, and now I became an author of one (and I still don’t know).

Feel free to use Alabaster as is or build your own theme using the principles outlined in the article—either is fine by me.

As for the principles themselves, they worked out fantastically for me. I’ve never wanted to go back, and just one look at any “traditional” color theme gives me a scare now.

I suspect that the only reason we don’t see more restrained color themes is that people never really thought about it. Well, this is your wake-up call. I hope this will inspire people to use color more deliberately and to change the default way we build and use color themes.

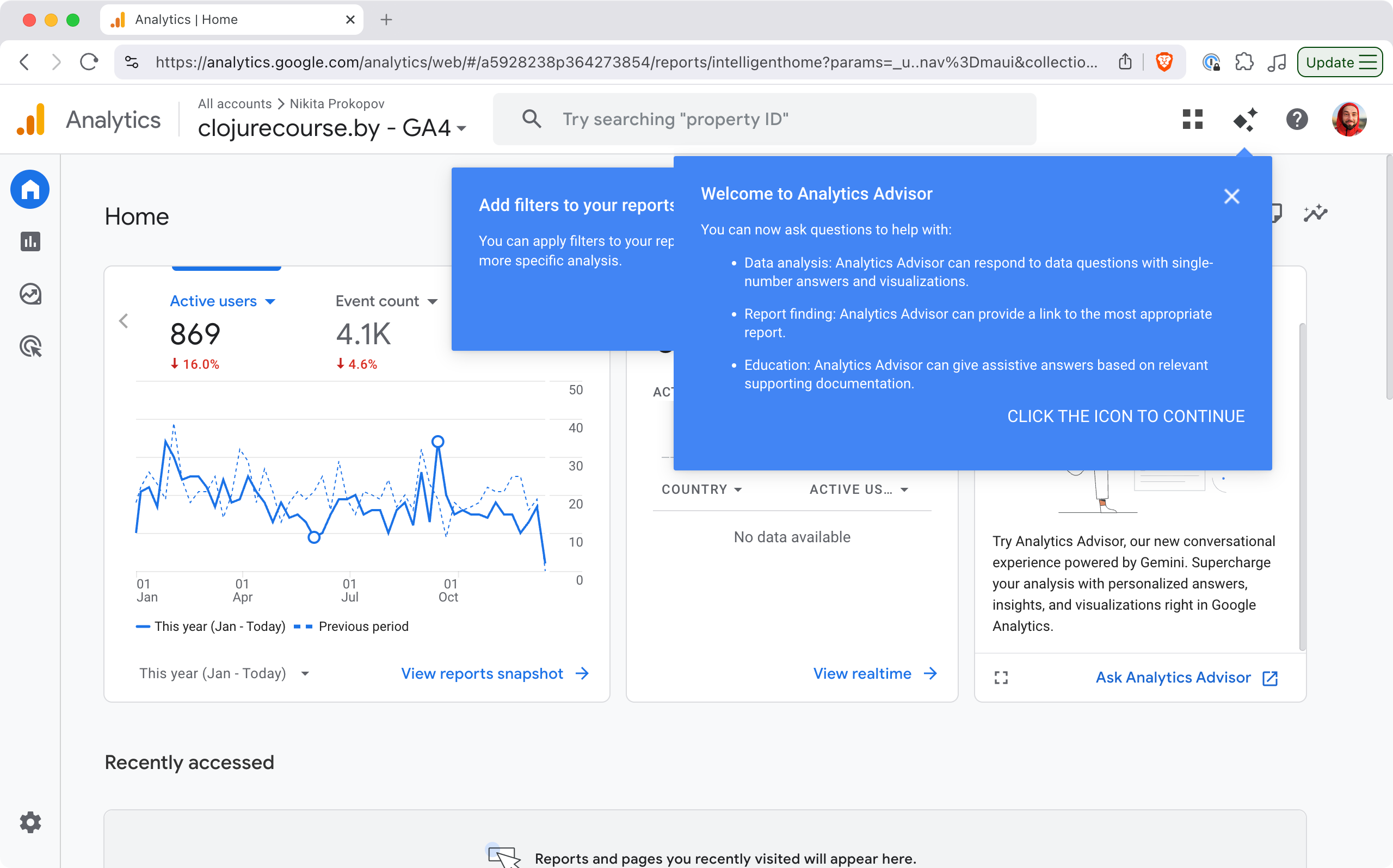

I have a weird relationship with statistics: on one hand, I try not to look at it too often. Maybe once or twice a year. It’s because analytics is not actionable: what difference does it make if a thousand people saw my article or ten thousand?

I mean, sure, you might try to guess people’s tastes and only write about what’s popular, but that will destroy your soul pretty quickly.

On the other hand, I feel nervous when something is not accounted for, recorded, or saved for future reference. I might not need it now, but what if ten years later I change my mind?

Seeing your readers also helps to know you are not writing into the void. So I really don’t need much, something very basic: the number of readers per day/per article, maybe, would be enough.

Final piece of the puzzle: I self-host my web projects, and I use an old-fashioned web server instead of delegating that task to Nginx.

Static sites are popular and for a good reason: they are fast, lightweight, and fulfil their function. I, on the other hand, might have an unfinished gestalt or two: I want to feel the full power of the computer when serving my web pages, to be able to do fun stuff that is beyond static pages. I need that freedom that comes with a full programming language at your disposal. I want to program my own web server (in Clojure, sorry everybody else).

All this led me on a quest for a statistics solution that would uniquely fit my needs. Google Analytics was out: bloated, not privacy-friendly, terrible UX, Google is evil, etc.

What is going on?

Some other JS solution might’ve been possible, but still questionable: SaaS? Paid? Will they be around in 10 years? Self-host? Are their cookies GDPR-compliant? How to count RSS feeds?

Nginx has access logs, so I tried server-side statistics that feed off those (namely, Goatcounter). Easy to set up, but then I needed to create domains for them, manage accounts, monitor the process, and it wasn’t even performant enough on my server/request volume!

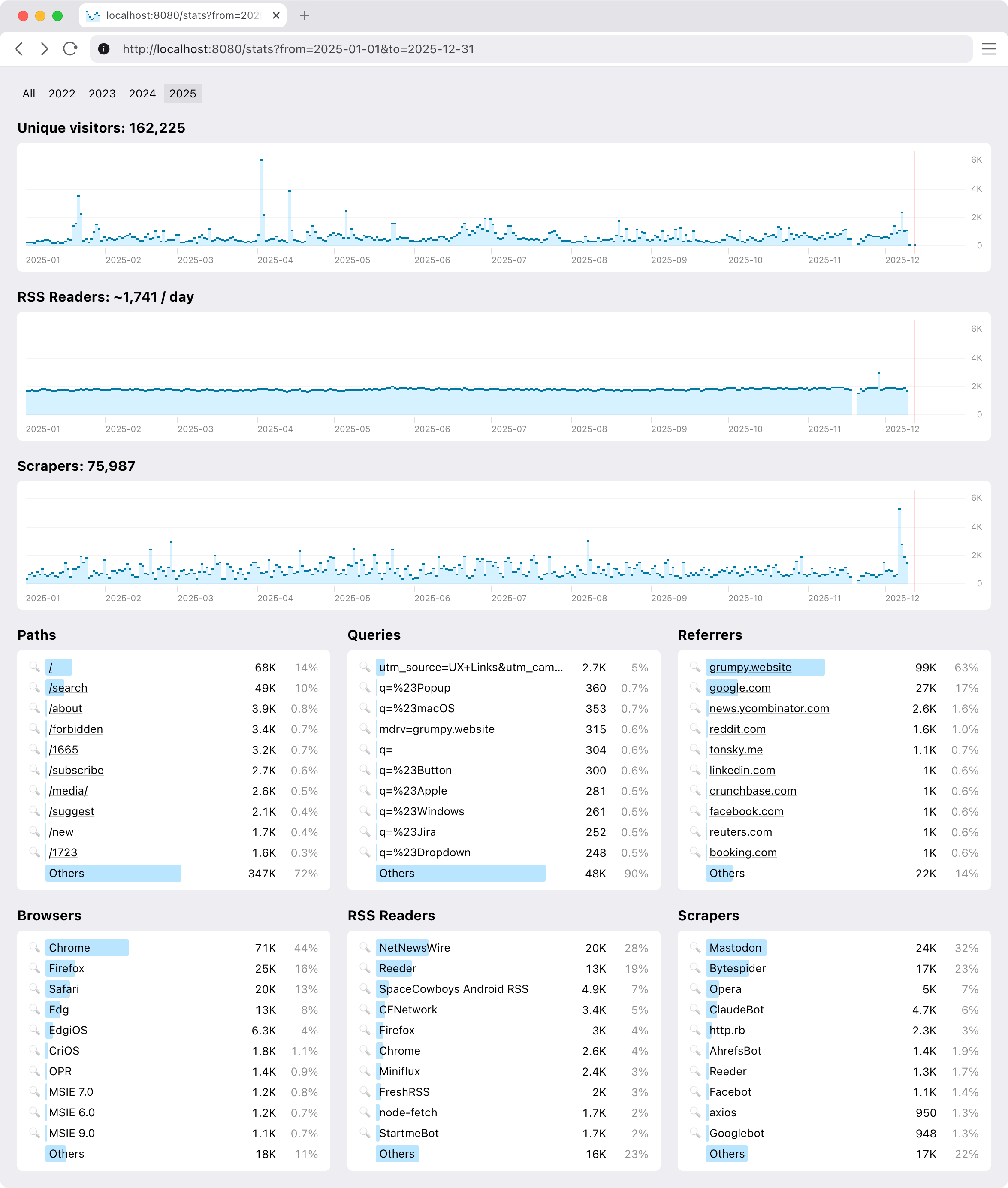

So I ended up building my own. You are welcome to join, if your constraints are similar to mine. This is how it looks:

It’s pretty basic, but does a few things that were important to me.

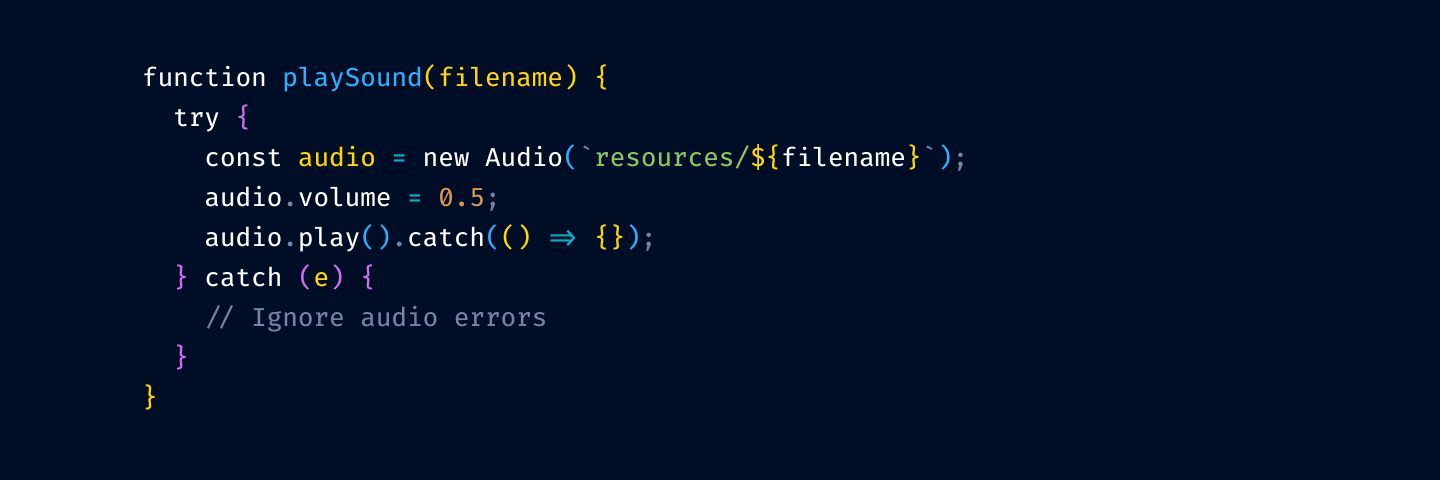

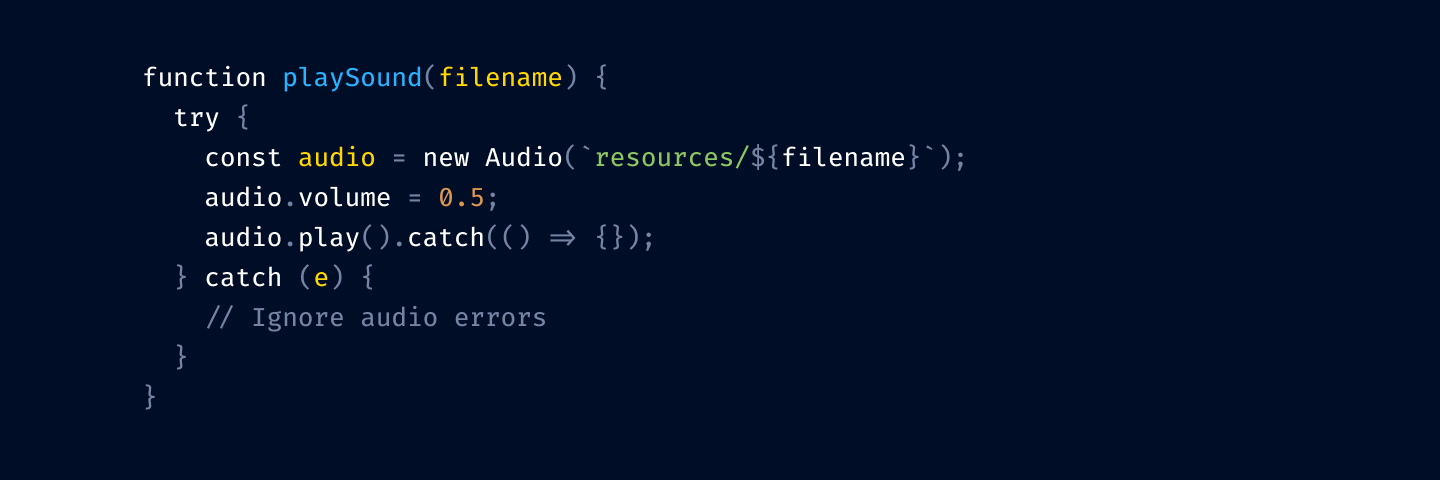

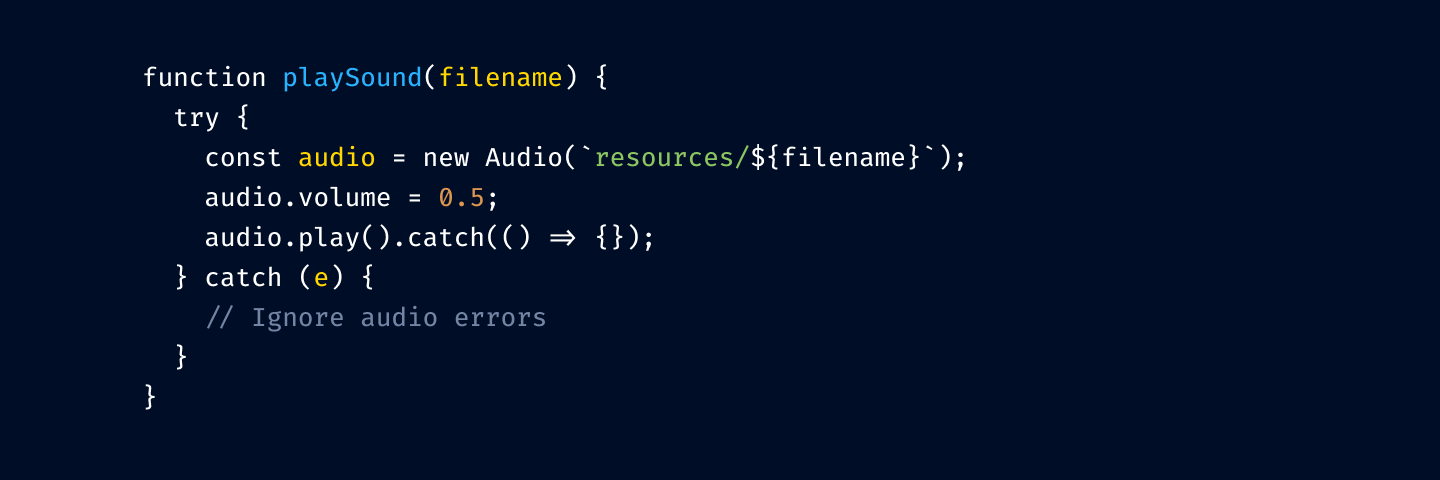

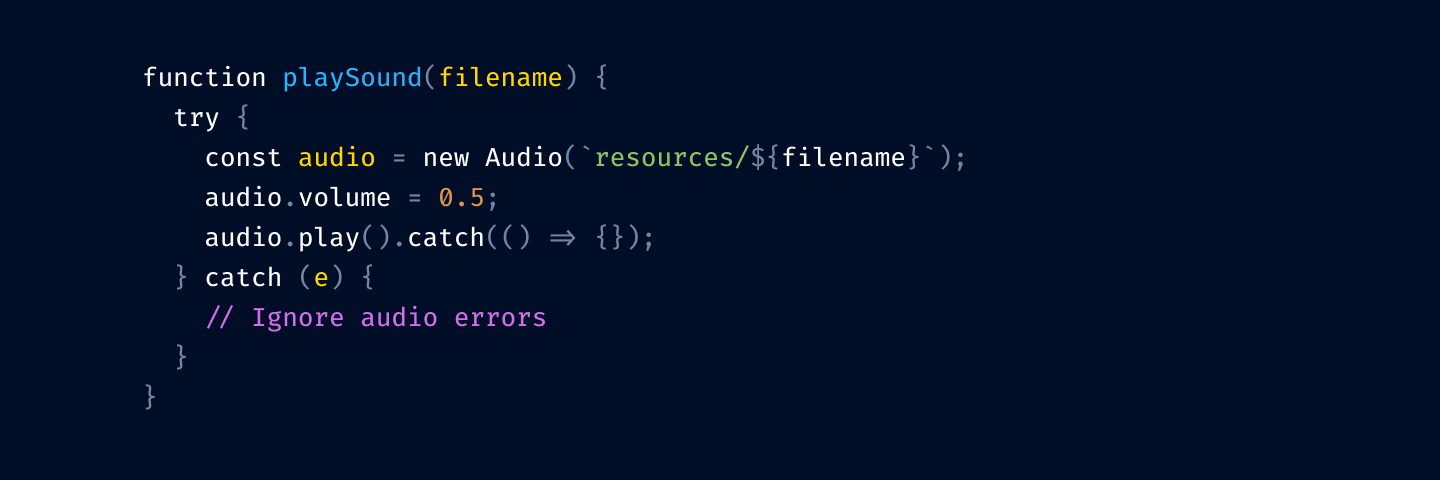

Extremely easy to set up. And I mean it as a feature.

Just add our middleware to your Ring stack and get everything automatically: collecting and reporting.

(def app
  (-> routes
      ...
      (ring.middleware.params/wrap-params)
      (ring.middleware.cookies/wrap-cookies)
      ...
      (clj-simple-stats.core/wrap-stats))) ;; <-- just add this

It’s zero setup in the best sense: nothing to configure, nothing to monitor, minimal dependency. It starts to work immediately and doesn’t ask anything from you, ever.

See, you already have your web server, why not reuse all the setup you did for it anyway?

We distinguish between request types. In my case, I am only interested in live people, so I count them separately from RSS feed requests, favicon requests, redirects, wrong URLs, and bots. Bots are particularly active these days. Gotta get that AI training data from somewhere.
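To make the idea concrete, here is a minimal sketch of what a counting Ring middleware with request classification could look like. It is not the clj-simple-stats implementation: `classify`, `wrap-count`, the `/feed.xml` path check, and the bot regex are all assumptions made up for illustration, and the counters live in a plain atom rather than a database.

```clojure
(defn classify
  "Assigns a request to a bucket. The rules are hypothetical, for illustration only."
  [request response]
  (let [ua (get-in request [:headers "user-agent"] "")]
    (cond
      (not= 200 (:status response))          :other   ; redirects, wrong URLs, ...
      (re-find #"(?i)bot|crawler|spider" ua) :bot
      (= "/feed.xml" (:uri request))         :feed
      :else                                  :browser)))

(defn wrap-count
  "Ring middleware: calls the wrapped handler, then bumps the counter
  for the request's bucket in the `counters` atom."
  [handler counters]
  (fn [request]
    (let [response (handler request)]
      (swap! counters update (classify request response) (fnil inc 0))
      response)))

;; Usage with a trivial handler
(def counters (atom {}))
(def app (wrap-count (fn [_request] {:status 200 :body "hi"}) counters))

(app {:uri "/" :headers {"user-agent" "Mozilla/5.0"}})
@counters
;; => {:browser 1}
```

A middleware is just a function from handler to handler, which is why adding one more line to the `->` stack is enough to see every request and response.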

RSS feeds are live people in a sense, so extra work was done to count them properly. Same reader requesting feed.xml 100 times in a day will only count as one request.
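One way to get "100 requests from the same reader count once per day" is to deduplicate on a per-day reader key before counting. The sketch below assumes a reader is identified by IP address plus User-Agent, and that requests are maps with `:date`, `:ip` and `:user-agent` keys. Both are assumptions for illustration; the post does not say how the library identifies a reader.

```clojure
(defn unique-feed-readers
  "Returns a map of date -> number of distinct readers on that date.
  A reader is (hypothetically) identified by IP + User-Agent."
  [requests]
  (->> requests
       (map (juxt :date :ip :user-agent)) ; one key per reader per day
       distinct                           ; repeated fetches collapse into one
       (map first)                        ; keep only the date
       frequencies))

(unique-feed-readers
  (concat
    (repeat 100 {:date "2026-03-01" :ip "10.0.0.1" :user-agent "NetNewsWire"})
    [{:date "2026-03-01" :ip "10.0.0.2" :user-agent "NetNewsWire"}]))
;; => {"2026-03-01" 2}
```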

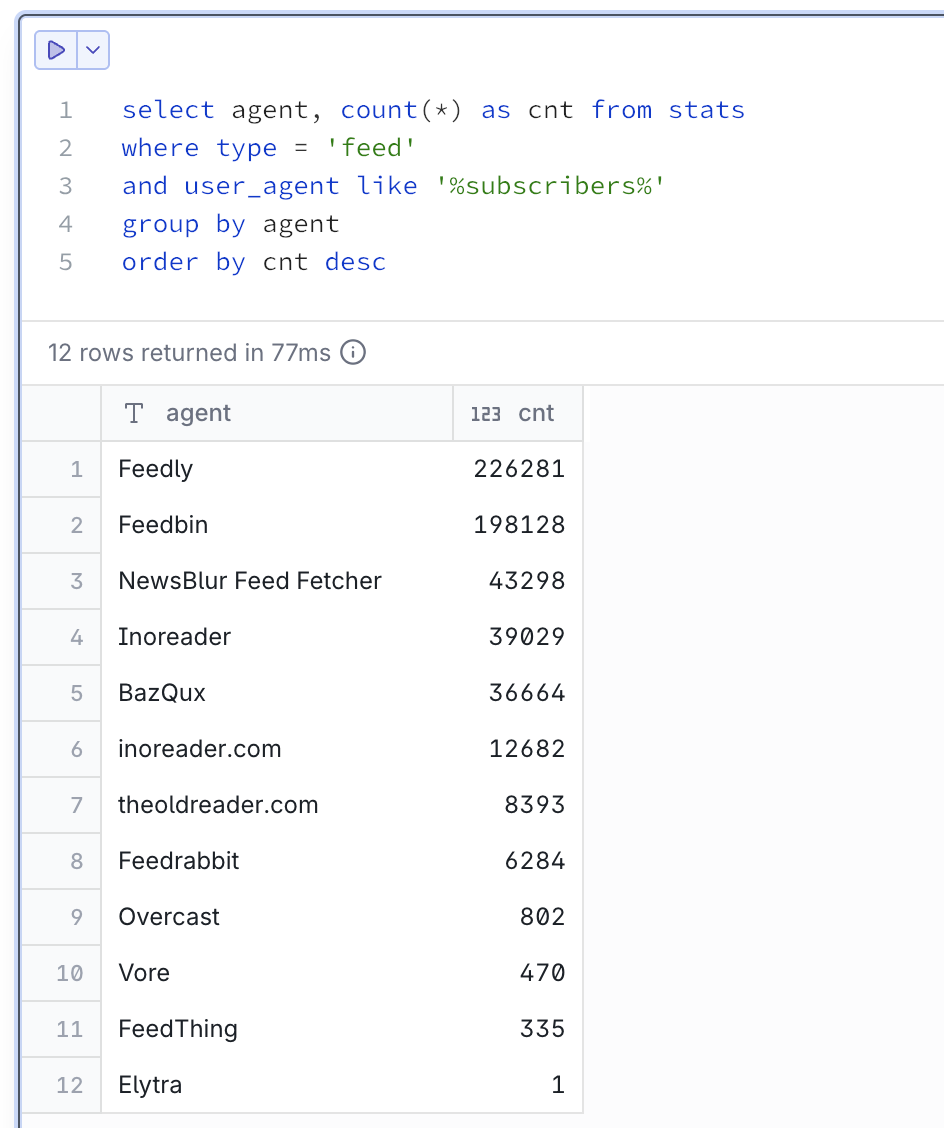

Hosted RSS readers often report user count in User-Agent, like this:

Feedly/1.0 (+http://www.feedly.com/fetcher.html; 457 subscribers; like FeedFetcher-Google)

Mozilla/5.0 (compatible; BazQux/2.4; +https://bazqux.com/fetcher; 6 subscribers)

Feedbin feed-id:1373711 - 142 subscribers

My personal respect and thank you to everybody on this list. I see you.
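Subscriber counts in User-Agent strings like the ones above can be pulled out with a small regular expression. This is a sketch of the idea, not the library's code: `subscribers` is a made-up name, and it only recognizes the "N subscribers" wording seen in these three examples.

```clojure
(defn subscribers
  "Returns the subscriber count advertised in a User-Agent string, or nil
  if the string does not contain \"<number> subscribers\"."
  [user-agent]
  (when-let [[_ n] (re-find #"(\d+) subscribers" user-agent)]
    (Long/parseLong n)))

(subscribers "Feedly/1.0 (+http://www.feedly.com/fetcher.html; 457 subscribers; like FeedFetcher-Google)")
;; => 457
(subscribers "Feedbin feed-id:1373711 - 142 subscribers")
;; => 142
(subscribers "Mozilla/5.0 (X11; Linux x86_64)")
;; => nil
```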

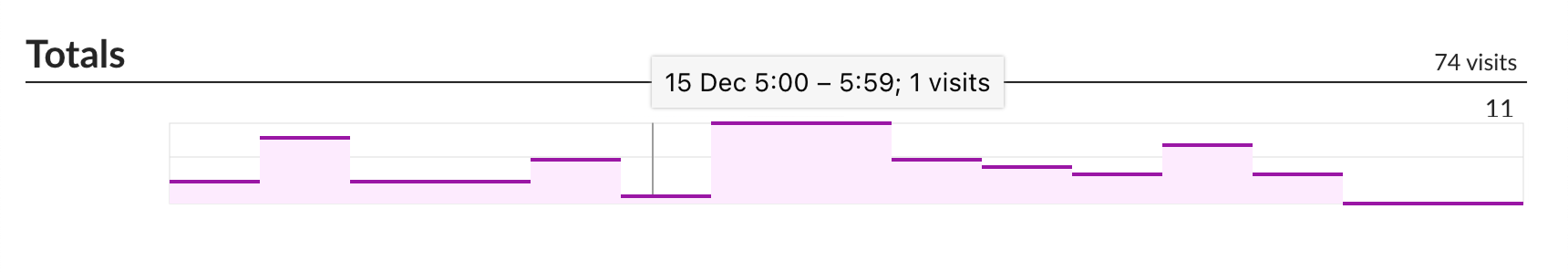

Visualization is important, and so is choosing the correct graph type. This is wrong:

Continuous line suggests interpolation. It reads like between 1 visit at 5am and 11 visits at 6am there were points with 2, 3, 5, 9 visits in between. Maybe 5.5 visits even! That is not the case.

This is how a semantically correct version of that graph should look:

Some attention was also paid to having reasonable labels on axes. You won’t see something like 117, 234, 10875. We always choose round numbers appropriate to the scale: 100, 200, 500, 1K etc.
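A common way to get such round labels is to snap a value up to the nearest 1, 2 or 5 times a power of ten. The sketch below shows that technique; it is an assumption about how this could be done, not the code the dashboard actually uses, and `nice-step` is a hypothetical name. It expects a positive number.

```clojure
(defn nice-step
  "Smallest number of the form 1, 2 or 5 times a power of ten that is >= x.
  x must be positive."
  [x]
  (let [magnitude (long (Math/pow 10 (Math/floor (Math/log10 x))))]
    (->> [1 2 5 10]
         (map #(* % magnitude))
         (filter #(>= % x))
         first)))

(map nice-step [117 234 10875])
;; => (200 500 20000)
```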

Goes without saying that all graphs have the same vertical scale and synchronized horizontal scroll.

We don’t offer much (as I don’t need much), but you can narrow reports down by page, query, referrer, user agent, and any date slice.

It would be nice to have some insights into “What was this spike caused by?”

Some basic breakdown by country would be nice. I do have IP addresses (for what they are worth), but I need a way to package GeoIP into some reasonable size (under 1 Mb, preferably; some loss of resolution is okay).

Finally, one thing I am really interested in is “Who wrote about me?” I do have referrers, only question is how to separate signal from noise.

Performance. DuckDB is a sport: it compresses data and runs column queries, so storing extra columns per row doesn’t affect query performance. Still, each dashboard hit is a query across the entire database, which at this moment (~3 years of data) sits around 600 MiB. I definitely need to look into building some pre-calculated aggregates.

One day.

Head to github.com/tonsky/clj-simple-stats and follow the instructions:

Let me know what you think! Is it usable to you? What could be improved?

Greetings folks!

Clojurists Together is pleased to announce that we are opening our Q2 2026 funding round for Clojure Open Source Projects. Applications will be accepted through the 19th of March 2026 (midnight Pacific Time). We are looking forward to reviewing your proposals! More information and the application can be found here.

We will be awarding up to $33,000 USD for a total of 5-7 projects. The $2k funding tier is for experimental projects or smaller proposals, whereas the $9k tier is for those that are more established. Projects generally run 3 months, however, the $9K projects can run between 3 and 12 months as needed. We expect projects to start at the beginning of April 2026.

A BIG THANKS to all our members for your continued support. We also want to encourage you to reach out to your colleagues and companies to join Clojurists Together so that we can fund EVEN MORE great projects throughout the year.

We surveyed the community in February to find out what issues were top of mind and what types of initiatives they would like us to focus on for this round of funding. As always, there were a lot of ideas and we hope they will be useful in informing your project proposals.

A number of themes appeared in the survey results.

The biggest theme, by far, was related to adoption and growth of Clojure. Respondents repeatedly mentioned that Clojure is niche, and although they are happy with Clojure, that makes it harder to justify for projects, to find employment, and to persuade others than it is for more popular languages. Respondents want a larger community and wider adoption. In particular, they want more public advocacy for Clojure, including videos, tutorials, public success stories, starter projects, and outreach in general.

Another major theme was AI. Respondents were concerned about AI coding assistants being perceived as having weak Clojure support, and they expressed frustration that Python is perceived as the safe choice for AI despite how well Clojure works with AI tooling. Nonetheless, respondents would like to see more work on tooling, guides, and resources for using AI with Clojure.

ClojureScript and JavaScript interop received the most specific attention. Respondents want CLJS/Cherry/Scittle to provide frictionless support for modern JavaScript standards (ES6 and ESM) and would like an overall simplification of the build and run process.

Developer experience issues came up a number of times, including: confusing error messages, poor documentation, and under-supported libraries. Improvements to any of those would be welcome.

Difficulty finding Clojure employment was another recurring theme. Respondents were not sure how to solve it, but suggested a community job board might be helpful.

Adoption:

AI/LLMs

Employment

Developer Experience

Good news, everyone! clojure-mode 5.22 is out with many small improvements and bug fixes!

While TreeSitter is the future of Emacs major modes, the present remains a bit more murky – not everyone is running a modern Emacs or an Emacs built with TreeSitter support, and many people have asked that “classic” major modes continue to be improved and supported alongside the newer TS-powered modes (in our case – clojure-ts-mode).

Your voices have been heard! On Bulgaria’s biggest national holiday (Liberation Day), you can feel liberated from any worries about the future of clojure-mode, as it keeps getting the love and attention that it deserves!

Looking at the changelog – 5.22 is one of the biggest releases in the last few years and I hope you’ll enjoy it!1

Now let me walk you through some of the highlights.

edn-mode has always been the quiet sibling of clojure-mode – a mode for editing EDN files that was more of an afterthought than a first-class citizen. That changed with 5.21 and the trend continues in 5.22. The mode now has its own dedicated keymap with data-appropriate bindings, meaning it no longer inherits code refactoring commands that make no sense outside of Clojure source files. Indentation has also been corrected – paren lists in EDN are now treated as data (which they are), not as function calls.

Small things, sure, but they add up to a noticeably better experience when you’re editing config files, test fixtures, or any other EDN data.

Font-locking has been updated to reflect Clojure 1.12’s additions – new built-in dynamic variables and core functions are now properly highlighted. The optional clojure-mode-extra-font-locking package covers everything from 1.10 through 1.12, including bundled namespaces and clojure.repl forms.2 Some obsolete entries (like specify and specify!) have been cleaned up as well.

On a related note, protocol method docstrings now correctly receive font-lock-doc-face styling, and letfn binding function names get proper font-lock-function-name-face treatment. These are the kind of small inconsistencies that you barely notice until they’re fixed, and then you wonder how you ever lived without them.

A new clojure-discard-face has been added for #_ reader discard forms. By default it inherits from the comment face, so discarded forms visually fade into the background – exactly what you’d expect from code that won’t be read. Of course, you can customize the face to your liking.

A few fixes that deserve a special mention:

- clojure-sort-ns no longer corrupts non-sortable forms – previously, sorting a namespace that contained :gen-class could mangle it. That’s fixed now.
- clojure-thread-last-all and line comments – the threading refactoring command was absorbing closing parentheses into line comments. Not anymore.
- clojure-update-ns works again – this one had been quietly broken and is now restored to full functionality.
- clojure-add-arity preserves arglist metadata – when converting from single-arity to multi-arity, metadata on the argument vector is no longer lost.

So, what’s actually next for clojure-mode? The short answer is: more of the same. clojure-mode will continue to receive updates, bug fixes, and improvements for the foreseeable future. There is no rush for anyone to switch to clojure-ts-mode, and no plans to deprecate the classic mode anytime soon.

That said, if you’re curious about clojure-ts-mode, its main advantage right now is performance. TreeSitter-based font-locking and indentation are significantly faster than the regex-based approach in clojure-mode. If you’re working with very large Clojure files and noticing sluggishness, it’s worth giving clojure-ts-mode a try. My guess is that most people won’t notice a meaningful difference in everyday editing, but your mileage may vary.

The two modes will coexist for as long as it makes sense. Use whichever one works best for you – they’re both maintained by the same team (yours truly and co) and they both have a bright future ahead of them. At least I hope so!

As usual - big thanks to everyone supporting my Clojure OSS work, especially the members of Clojurists Together! You rock!

That’s all I have for you today. Keep hacking!

This is a complete Maven-first Clojure/Java interop application. It details how to create a Maven application, enrich it with clojure code, call into clojure from Java, and hook up the entry points for both Java and Clojure within the same project.

Further, it contains my starter examples of using the fantastic Incanter Statistical and Graphics Computing Library in clojure. I include both a pom.xml and a project.clj showing how to pull in the dependencies.

The outcome is a consistent maven-archetyped project, wherein maven and leiningen play nicely together. This allows the best of both ways to be applied together. For the emacs user, I include support for cider and swank. NRepl by itself is present for general purpose use as well.

follow these steps

mvn archetype:generate -DgroupId=com.mycompany.app -DartifactId=my-app -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false
cd my-app
mvn package
java -cp target/my-app-1.0-SNAPSHOT.jar com.mycompany.app.App

Hello World

Create a clojure core file

mkdir -p src/main/clojure/com/mycompany/app
touch src/main/clojure/com/mycompany/app/core.clj

Give it some goodness…

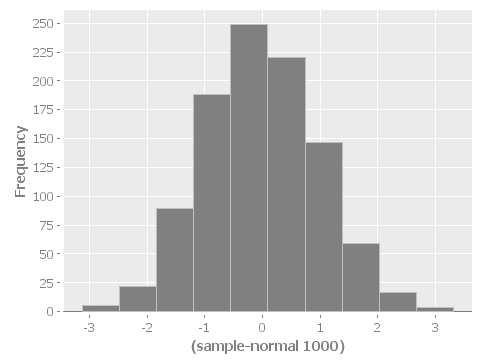

(ns com.mycompany.app.core
  (:gen-class)
  (:use (incanter core stats charts)))

(defn -main [& args]
  (println "Hello Clojure!")
  (println "Java main called clojure function with args: "
           (apply str (interpose " " args))))

(defn run []
  (view (histogram (sample-normal 1000))))

Notice that we’ve added in the Incanter Library and made a run function to pop up a histogram of sample data

<dependencies>
  <dependency>
    <groupId>org.clojure</groupId>
    <artifactId>clojure</artifactId>
    <version>1.7.0</version>
  </dependency>
  <dependency>
    <groupId>org.clojure</groupId>
    <artifactId>clojure-contrib</artifactId>
    <version>1.2.0</version>
  </dependency>
  <dependency>
    <groupId>incanter</groupId>
    <artifactId>incanter</artifactId>
    <version>1.9.0</version>
  </dependency>
  <dependency>
    <groupId>org.clojure</groupId>
    <artifactId>tools.nrepl</artifactId>
    <version>0.2.10</version>
  </dependency>
  <!-- pick your poison swank or cider. just make sure the version of nRepl matches. -->
  <dependency>
    <groupId>cider</groupId>
    <artifactId>cider-nrepl</artifactId>
    <version>0.10.0-SNAPSHOT</version>
  </dependency>
  <dependency>
    <groupId>swank-clojure</groupId>
    <artifactId>swank-clojure</artifactId>
    <version>1.4.3</version>
  </dependency>
</dependencies>

Modify your java main to call your clojure main like in the following:

package com.mycompany.app;

// for clojure's api
import clojure.lang.IFn;
import clojure.java.api.Clojure;

// for my api
import clojure.lang.RT;

public class App
{
    public static void main( String[] args )
    {
        System.out.println("Hello Java!" );

        try {
            // running my clojure code
            RT.loadResourceScript("com/mycompany/app/core.clj");
            // the Clojure entry point is defined as -main, so look it up under that name
            IFn main = RT.var("com.mycompany.app.core", "-main");
            // spread the Java args so -main receives them as individual arguments
            main.applyTo(RT.seq(args));

            // running the clojure api
            IFn plus = Clojure.var("clojure.core", "+");
            System.out.println(plus.invoke(1, 2).toString());
        } catch(Exception e) {
            e.printStackTrace();
        }
    }
}

You should add in these plugins to your pom.xml

<plugin>
  <artifactId>maven-assembly-plugin</artifactId>
  <configuration>
    <descriptorRefs>
      <descriptorRef>jar-with-dependencies</descriptorRef>
    </descriptorRefs>
    <archive>
      <manifest>
        <!-- use clojure main -->
        <!-- <mainClass>com.mycompany.app.core</mainClass> -->
        <!-- use java main -->
        <mainClass>com.mycompany.app.App</mainClass>
      </manifest>
    </archive>
  </configuration>
  <executions>
    <execution>
      <id>make-assembly</id>
      <phase>package</phase>
      <goals>
        <goal>single</goal>
      </goals>
    </execution>
  </executions>
</plugin>

Add this plugin to give your project the mvn clojure:… commands

A full list of these is posted later in this article.

<plugin>
  <groupId>com.theoryinpractise</groupId>
  <artifactId>clojure-maven-plugin</artifactId>
  <version>1.7.1</version>
  <configuration>
    <mainClass>com.mycompany.app.core</mainClass>
  </configuration>
  <executions>
    <execution>
      <id>compile-clojure</id>
      <phase>compile</phase>
      <goals>
        <goal>compile</goal>
      </goals>
    </execution>
    <execution>
      <id>test-clojure</id>
      <phase>test</phase>
      <goals>
        <goal>test</goal>
      </goals>
    </execution>
  </executions>
</plugin>

Add Java version targeting

This is always good to have if you are working against multiple versions of Java.

<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-compiler-plugin</artifactId>
  <version>3.3</version>
  <configuration>
    <source>1.8</source>
    <target>1.8</target>
  </configuration>
</plugin>

Add this plugin to give your project the mvn exec:… commands

The maven-exec-plugin is nice for running your project from the commandline, build scripts, or from inside an IDE.

<plugin>
  <groupId>org.codehaus.mojo</groupId>
  <artifactId>exec-maven-plugin</artifactId>
  <version>1.4.0</version>
  <executions>
    <execution>
      <goals>
        <goal>exec</goal>
      </goals>
    </execution>
  </executions>
  <configuration>
    <mainClass>com.mycompany.app.App</mainClass>
  </configuration>
</plugin>

With this plugin you can manipulate the manifest of your default package. In this case, I’m not adding a main class, because I’m using the uberjar above, which bundles all the dependencies. However, I included this section for cases where you need a non-standalone assembly.

<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-jar-plugin</artifactId>
  <version>2.6</version>
  <configuration>
    <archive>
      <manifest>
        <!-- use clojure main -->
        <!-- <mainClass>com.mycompany.app.core</mainClass> -->
        <!-- use java main -->
        <!-- <mainClass>com.mycompany.app.App</mainClass> -->
      </manifest>
    </archive>
  </configuration>
</plugin>

mvn package

java -cp target/my-app-1.0-SNAPSHOT-jar-with-dependencies.jar com.mycompany.app.App

java -cp target/my-app-1.0-SNAPSHOT-jar-with-dependencies.jar com.mycompany.app.core

java -jar target/my-app-1.0-SNAPSHOT-jar-with-dependencies.jar

mvn exec:java

mvn exec:java -Dexec.mainClass="com.mycompany.app.App"

mvn exec:java -Dexec.mainClass="com.mycompany.app.core" -Dexec.args="foo"

mvn clojure:run

mkdir -p src/test/clojure/com/mycompany/app
touch src/test/clojure/com/mycompany/app/core_test.clj

Add the following content:

(ns com.mycompany.app.core-test
  (:require [clojure.test :refer :all]
            [com.mycompany.app.core :refer :all]))

(deftest a-test
  (testing "Rigourous Test :-)"
    (is (= 0 0))))

mvn clojure:test

Or

mvn clojure:test-with-junit

Here is the full set of options available from the clojure-maven-plugin:

mvn ...
    clojure:add-source
    clojure:add-test-source
    clojure:compile
    clojure:test
    clojure:test-with-junit
    clojure:run
    clojure:repl
    clojure:nrepl
    clojure:swank
    clojure:nailgun
    clojure:gendoc
    clojure:autodoc
    clojure:marginalia

See documentation:

touch project.clj

Add this content

(defproject my-sandbox "1.0-SNAPSHOT"
  :description "My Encanter Project"
  :url "http://joelholder.com"
  :license {:name "Eclipse Public License"
            :url "http://www.eclipse.org/legal/epl-v10.html"}
  :dependencies [[org.clojure/clojure "1.7.0"]
                 [incanter "1.9.0"]]
  :main com.mycompany.app.core
  :source-paths ["src/main/clojure"]
  :java-source-paths ["src/main/java"]
  :test-paths ["src/test/clojure"]
  :resource-paths ["resources"]
  :aot :all)

Note that we’ve set the source code and test paths for both java and clojure to match the maven-way of doing this.

This gives us a consistent way of hooking the code from both lein and mvn. Additionally, I’ve added the incanter library here. The dependency should be expressed in the project file, because when we run nRepl from this directory, we want it to be available in our namespace, i.e. com.mycompany.app.core

lein run

lein test

This blog entry was exported to HTML from the README.org of this project. It sits in the base directory of the project. By using it to describe the project and include executable blocks of code from the project itself, we’re able to provide working examples of how to use the library in its documentation. People can simply clone our project and try out the library by executing its documentation. Very nice.

Make sure you jack-in to cider first:

M-x cider-jack-in (Have it mapped to F9 in my emacs)

The Clojure code block

#+begin_src clojure :tangle ./src/main/clojure/com/mycompany/app/core.clj :results output
(-main)
(run)
#+end_src

Blocks are run in org-mode with C-c C-c

(-main) (run)

Hello Clojure!
Java main called clojure function with args:

Note that we ran both our main and run functions here. -main prints out the text shown above. The run function actually opens the incanter java image viewer and shows us a picture of our graph.

I have purposefully not invested in styling these graphs, in order to keep the code examples simple and focused. Incanter can produce really beautiful output, though. Here’s a link to get you started:

(use '(incanter core charts pdf))

;;; Create the x and y data:
(def x-data [0.0 1.0 2.0 3.0 4.0 5.0])
(def y-data [2.3 9.0 2.6 3.1 8.1 4.5])

(def xy-line (xy-plot x-data y-data))
(view xy-line)
(save-pdf xy-line "img/incanter-xy-line.pdf")
(save xy-line "img/incanter-xy-line.png")

Finally, here are some resources to move you along the journey. I drew on the links cited below, along with a night of hacking, to arrive at a nice, clean interop skeleton. Feel free to use my code, available here:

https://github.com/jclosure/my-app

For the eager, here is a link to my full pom:

Starter project:

This incubator project from the Apache Foundation demos drinking from the twitter hose with twitter4j and fishing in the streams with Java, Clojure, Python, and Ruby. Very cool and very powerful..

https://github.com/apache/storm/tree/master/examples/storm-starter

Testing Storm Topologies in Clojure:

http://www.pixelmachine.org/2011/12/17/Testing-Storm-Topologies.html

READ this to give your clojure workflow more flow

Clojure and Java are siblings on the JVM; they should play nicely together. Maven enables them to be easily mixed together in the same project or between projects. For a more in-depth example of creating and consuming libraries written in Clojure, see Michael Richards’ article detailing how to use Clojure to implement interfaces defined in Java. He uses a FactoryMethod to abstract the mechanics of getting the implementation back into Java, which makes the Clojure code virtually invisible from an API perspective. Very nice. Here’s the link:

http://michaelrkytch.github.io/programming/clojure/interop/2015/05/26/clj-interop-require.html

Happy hacking!..

As I started using coding agents more, development got faster in general. But as usually happens, when some roadblocks disappear, other inconveniences become more visible and annoying. Yeah… this time it was Clojure startup time.

In one of my bigger projects, the Defold editor, lein test can spend 10 to 30 seconds loading namespaces to run a test that itself takes less than a second. This is fine when you run a full test suite, but painful for agent-driven iteration. And yes, I know about Improving Dev Startup Time, and no, it does not help enough.

While it would be nice to improve startup time, it would be even better for an agent to actually use the REPL-driven development workflow that Clojure is designed for. Agents won’t naturally do it unless nudged in the right direction. So today I took the time to set it up, and I’m so happy with it that I want to share the experience!

To make an agent use RDD, it needs to find a REPL, send a form to it, and get a result back. Doing that is surprisingly easy: one convention for discovery, one tiny script, and one skill prompt.

The important part is discoverability. I prefer to use socket REPLs, but I don’t want to pass ports around; the agent should find a running REPL by itself.

To do that, I added a Leiningen injection in a :user profile that:

- starts a clojure.core.server REPL on a random port
- writes the port to .repls/pid-{process-pid}.port in the current directory used to start a Leiningen project
- ensures .repls/.gitignore exists and ignores everything in that directory

This makes it easy to discover the REPLs programmatically while keeping git clean.
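The post does not show the injection itself, so the following is only a minimal sketch of what such a startup function could look like. The namespace, the function name `start-discoverable-repl!`, and the server name `"agent-repl"` are all hypothetical; the sketch also assumes Java 9+ for `ProcessHandle`.

```clojure
(ns user.repl-discovery
  (:require [clojure.core.server :as server]
            [clojure.java.io :as io]))

(defn start-discoverable-repl!
  "Starts a socket REPL on an OS-assigned port and records that port in
  <dir>/.repls/pid-<pid>.port. Returns the port number."
  [dir]
  (let [socket    (server/start-server
                   {:name   "agent-repl"                 ; hypothetical server name
                    :port   0                            ; 0 lets the OS pick a free port
                    :accept 'clojure.core.server/repl})
        port      (.getLocalPort ^java.net.ServerSocket socket)
        pid       (.pid (java.lang.ProcessHandle/current)) ; Java 9+
        repls-dir (io/file dir ".repls")
        gitignore (io/file repls-dir ".gitignore")
        port-file (io/file repls-dir (str "pid-" pid ".port"))]
    (.mkdirs repls-dir)
    ;; Ignore everything in .repls so port files never show up in git.
    (when-not (.exists gitignore)
      (spit gitignore "*\n"))
    (spit port-file (str port))
    ;; Ask the JVM to delete the port file on normal exit.
    (.deleteOnExit port-file)
    port))
```

Calling `(start-discoverable-repl! ".")` from a startup hook would leave a single port file per running JVM, which is all a discovery script needs to look for.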

To find the port, I vibe-coded an eval.sh script that:

- scans .repls to find a running REPL
- sends the forms to it using nc

The implementation is trivial, so there is no point in sharing it here. The idea is what matters: find the port in a known location and pipe input forms to the REPL server.

I added a Codex skill that points to this script and includes common patterns, such as:

./eval.sh '(in-ns (quote clojure.string)) (join "," [1 2 3])'

./eval.sh '(binding [clojure.test/*test-out* *out*] (clojure.test/run-tests (quote test-ns)))'

Iterate in small steps, etc. You know the drill.

First of all, agentic engineering got noticeably faster. With a warm REPL, Codex can run many small checks while iterating on code.

But what impressed me more is that it was actually quite capable of using the REPL to iterate in a running VM. For example, when it wanted to check a non-trivial invariant in a function it was working on, it used fuzzing to generate many examples to see whether the implementation worked as expected. That was cool and useful! I don’t typically do that in the REPL myself.

Turns out Codex can do REPL-driven development quite well!

Building a composable plotting API in Clojure, from views and layers to scatterplot matrices

Using modern interop tools such as libpython‑clj, developers can integrate Clojure’s machine-learning capabilities with Python’s extensive ecosystem without incurring unnecessary overhead. Teams can now perform numerical computing in Clojure while leveraging the full power of NumPy’s C extensions for vectorization, broadcasting, and linear algebra.

When teams import NumPy arrays directly into their Clojure workflows, they get the best of both worlds: Clojure’s functional style and concurrency, plus NumPy’s raw performance. For teams developing AI software, this just makes sense. It is a smoother path to scalable, production-ready software without having to compromise.

If developers want Clojure and Python to work together, libpython-clj sets the bar. They get direct access to NumPy, SciPy, and Scikit‑learn without extra layers or complications. Thanks to zero-copy memory mapping, data moves between the JVM and CPython without a hitch. Developers won’t waste time converting data in both directions.

Flexiana has strong expertise in connecting Clojure and Python for machine learning, and several detailed case studies demonstrate how libpython-clj enables teams to use major tools such as NumPy, SciPy, and scikit-learn in production environments. What stands out is how these examples show that developers do not have to choose between Python’s fast research ecosystem and Clojure’s rock-solid stability—they can leverage the strengths of both. This balanced approach helps teams build scalable, production-ready software solutions for real-world projects.

If developers want to handle data directly in Clojure, tech.ml.dataset is the go-to option. It is the closest thing to Pandas on the JVM. The best part? It plugs straight into libpython-clj, allowing data transfer between the JVM and CPython without extra copies. Teams can use Clojure to prepare and manage their datasets before sending them to NumPy for intensive computational tasks.

Pandas vs. tech.ml.dataset

| Feature | Pandas (Python) | tech.ml.dataset (Clojure) |
| --- | --- | --- |
| Columns | Flexible column types | Strongly typed columns |
| Indexing | Labels and multi-indexing | Functional style indexing |
| Data Sharing | Needs serialization | Zero-copy with libpython-clj |
| Interop | Works inside Python only | Connects directly with NumPy |

Don’t need Python at all? Neanderthal is a powerhouse for numerical computing in Clojure. It is fast, built on BLAS and LAPACK, and if teams want GPU action, it connects to CUDA and OpenCL. When teams need direct GPU access without Python, Neanderthal runs entirely in the JVM.

| Feature | NumPy (Interop) | Neanderthal (Native) |
| --- | --- | --- |
| Ecosystem | Python ML libraries (SciPy, scikit-learn, PyTorch) | Focused on linear algebra, deep learning, and JVM tools |
| Performance | Very high: native BLAS/LAPACK via C extensions | Very high: native BLAS/LAPACK with JVM-native integration (no Python interop) |
| Ease of Use | Familiar to Python developers | Steeper learning curve for Clojure developers |
| Memory | Shared via libpython-clj | Native JVM/off-heap |
| GPU Support | CuPy/PyTorch interop | Built-in CUDA/OpenCL |
| Integration | Works best in hybrid workflows | Best for JVM-only projects |
| Community | Large Python community, many tutorials | Smaller but focused Clojure community |
| Deployment | Common in research and prototyping | Strong fit for production JVM systems |
| Flexibility | Wide range of ML libraries | Specialized for numerics and performance |

This table shows the trade‑offs clearly:

NumPy interop is a good choice if teams already work in Python and want access to its machine learning libraries. Neanderthal is better when teams need maximum speed, GPU acceleration, and want to stay fully inside the JVM.

To begin with, add libpython-clj to the deps.edn file. That is the bridge between Clojure machine learning and Python’s numerical stack.

{:deps {clj-python/libpython-clj {:mvn/version "2.024"}}}

Now, double-check where Python is installed on the system. Clojure requires a path to load libraries such as NumPy. Developers need to point to their Python interpreter or virtual environment.
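As a rough sketch of that last step, libpython-clj can be told explicitly which interpreter to use before any Python module is loaded. The path below is a placeholder, not a real location, and this assumes the `libpython-clj2.python` namespace from the 2.x releases.

```clojure
(require '[libpython-clj2.python :as py])

;; Placeholder path (assumption): replace it with your own interpreter,
;; for example the one inside a project virtual environment.
(py/initialize! :python-executable "/path/to/venv/bin/python")
```

If this call is skipped, the library tries to locate a Python installation on its own, which is usually fine on a laptop but worth pinning down explicitly for deployments.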

Once developers have configured the dependencies, they can import Python libraries directly into their REPL.

;; require-python lives in the libpython-clj2.require namespace
(require '[libpython-clj2.require :refer [require-python]])

(require-python '[numpy :as np])

;; Simple demo
(def arr (np/array [1 2 3 4]))
(np/sum arr) ;; => 10

This quick example shows how developers can create a NumPy array and run a few operations, all from Clojure. It shows that NumPy interop works smoothly and that AI software development gets the ideal combination: Python’s speed with Clojure’s structure.

Here’s where things get really interesting. With tech.v3.dataset, you can move data between the JVM and CPython without making extra copies. This is called zero‑copy integration.

This setup makes Clojure a real contender for numerical computing. Developers are not merely connecting two languages. They are building scalable software solutions that can handle complex, real-world tasks.

Use Clojure’s sequence functions for ETL. They make cleaning and shaping data pretty effortless.
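A minimal sketch of what that cleaning step can look like with plain sequence functions. The sample rows and the names `raw-rows`, `clean-row`, and `temps-by-city` are made up for illustration.

```clojure
(require '[clojure.string :as str])

;; Hypothetical raw input: string-typed numbers, a missing value, messy city names.
(def raw-rows
  [{:id 1 :temp "21.5" :city " Oslo "}
   {:id 2 :temp nil    :city "Bergen"}
   {:id 3 :temp "19.0" :city "oslo"}])

(defn clean-row
  "Parses the temperature and normalizes the city name."
  [row]
  (-> row
      (update :temp #(Double/parseDouble %))
      (update :city #(-> % str/trim str/lower-case))))

(def cleaned
  (->> raw-rows
       (filter :temp)      ; drop rows with a missing temperature
       (map clean-row)))

;; Group the numeric column by city, ready to hand over for number crunching.
(def temps-by-city
  (->> cleaned
       (group-by :city)
       (reduce-kv (fn [m city rows] (assoc m city (mapv :temp rows))) {})))

temps-by-city ;; => {"oslo" [21.5 19.0]}
```

Every step returns a new value and leaves `raw-rows` untouched, so each stage can be inspected at the REPL on its own.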

Transfer the ready-made data to NumPy and let Python do the math.

👉 Check out the NumPy official docs for more details.

Once the data is ready, load models from Scikit‑learn or PyTorch.

Clojure maintains deployment stability, while Python’s ML ecosystem manages the models.

The pipeline uses Clojure and Python, where they work best. Teams get:

Developers can scale their software while leveraging the best features of both languages.

Clojure’s REPL makes coding fast: write, change, and run code on the spot. That loop makes it easy to test ideas. In AI software development, where teams often need to experiment extensively, that speed makes a difference. Sharing snippets and testing together keeps work moving. It is simply a smoother way to work, especially when everyone is collaborating to solve tough problems.

Python’s math libraries often change developers’ data in place, which can lead to unexpected side effects. With Clojure for machine learning, they can integrate NumPy into a functional workflow. Their data remains predictable, functions do not modify the external state, and debugging becomes less painful. They spend less time chasing weird bugs or wondering why their output changed. What is the end result for teams working on numerical computing in Clojure? Code is clean, pipelines are stable, and growth is easier.

On the JVM, Clojure manages heavy workloads with real concurrency. Combine that with NumPy interop to speed up numerical computations, and teams get an environment that can handle huge datasets without slowing down. Flexiana has seen real drops in latency when combining JVM concurrency with NumPy’s speed in ML pipelines. It is not just about raw speed. This setup lets teams scale up confidently, with the assurance their system won’t fail when the load grows.

Clojure handles concurrency, orchestration, and enterprise tasks, while NumPy handles the math. Together, they produce an accurate and efficient pipeline. Developers get both performance and stability, so they don’t have to choose. If the team needs to manage distributed workloads while handling heavy numeric processing, this approach works well. Tools like libpython-clj tie everything together, making integration feel seamless. It is a solid way to build hybrid systems that actually last.

Data coordination between Clojure and Python is challenging. If teams are not paying attention, they will end up copying large datasets multiple times, wasting memory and slowing everything down.

How to avoid it: Go for zero-copy integration whenever possible, using tools like libpython-clj or tech.ml.dataset. Do as much as possible in Clojure, and only bring in NumPy when its speed is really needed. Always monitor memory usage when dealing with large arrays.

Interop is great, but there is an overhead involved. When developers call Python functions from Clojure thousands of times in a tight loop, performance drops drastically.

How to avoid it: Batch the work. Push large chunks of data to NumPy and allow it to process the calculations. Keep the control flow in Clojure and cut down on all those frequent back-and-forth calls.
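To make the difference concrete, here is a small sketch contrasting the two styles. It assumes libpython-clj 2.x with NumPy installed and a Python interpreter the library can find; the function names `sqrt-each` and `sqrt-batched` are illustrative only.

```clojure
(require '[libpython-clj2.require :refer [require-python]])
(require-python '[numpy :as np])

(def xs (vec (range 1 10001)))

;; Chatty: one JVM -> Python round trip per element (10,000 calls).
(defn sqrt-each [xs]
  (mapv #(np/sqrt %) xs))

;; Batched: build the array once, then make a single call;
;; the loop over elements runs inside NumPy.
(defn sqrt-batched [xs]
  (np/sqrt (np/array xs)))
```

Both functions compute the same values. The batched version crosses the language boundary a fixed number of times regardless of input size, which is what keeps interop overhead negligible.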

Clojure runs on the JVM, which means it is built for concurrency. But if teams forget to design for it, workloads jam up. Python’s GIL limits running things in parallel on the Python side.

How to avoid it: To handle concurrent processes, rely on Clojure’s concurrency tools: atoms, refs, agents, and futures. Let Python focus on numerical computation, while Clojure runs the show and scales things up. This helps avoid running into the GIL’s roadblocks.

Interop setups tend to fail when developers move from their laptops to production: wrong paths, missing dependencies, corrupted environments. A setup that works on one machine can suddenly fail on another.
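As a pure-Clojure sketch of that orchestration pattern: split the work into chunks, run each chunk in a future, and track progress in an atom. `process-chunk` is a stand-in (an assumption) for wherever a single batched numeric call would go in a real pipeline.

```clojure
(defn process-chunk
  "Stand-in for the numeric work on one chunk of data."
  [chunk]
  (reduce + (map #(* % %) chunk)))

;; Shared progress counter, updated safely from many threads.
(def completed (atom 0))

(defn sum-of-squares [xs chunk-size]
  (let [chunks  (partition-all chunk-size xs)
        ;; doall forces every future to start before we begin waiting on any.
        futures (doall
                 (map #(future
                         (let [result (process-chunk %)]
                           (swap! completed inc)
                           result))
                      chunks))]
    (reduce + (map deref futures))))

(sum-of-squares (range 1 101) 25) ;; => 338350
;; In a standalone script, call (shutdown-agents) at the end so the JVM can exit.
```

The control flow, chunking, and bookkeeping all stay on the JVM side; if `process-chunk` called into Python, those calls would still take turns on the GIL, which is one more reason to keep each call large and infrequent.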

How to avoid it: Test the startup scripts. Ensure the Python interpreter is configured correctly, with automated CI/CD checks to quickly identify issues.

Clojure brings everything together smoothly. It consolidates the entire pipeline into one place without creating confusion. Developers can connect Python libraries, JVM tools, and their own logic quickly, with no mountains of boilerplate, and get straight to the real problems. And with the REPL, developers are not stuck waiting for long builds. Quick adjustments and tests keep projects on track.

Clojure sticks to a functional style, so the code stays clean, and the data flows in a way that actually makes sense. Side effects remain under control. When your pipeline gets bigger, you spot bugs early, and resolving them doesn’t become a hassle. New people can jump in and understand what’s happening without getting confused, which makes onboarding much easier. Bottom line: fewer nasty surprises, easier upkeep.

The JVM has been around forever, and people trust it. Years of tweaking, monitoring, and deploying mean it just works. NumPy runs efficiently, so systems scale up and handle heavy loads with ease. It remains fast and stable as workloads increase.

The REPL makes small changes easy to test. Fast result sharing keeps everyone in sync. Teams scale with ease thanks to clear feedback that shows changes.

Clojure connects easily to Python, Java, and JVM tools. It plays nicely with the enterprise tools that teams already have, and they still get access to Python’s whole ML world. Teams are not required to choose sides; they can use what works best from both. They get the freedom to bring in new tools without breaking what is already working.

Q1: Does libpython‑clj make code slow?

Not really. The primary slowdown stems from repeatedly switching between Clojure and Python. For heavy numerical stuff, that extra cost barely matters compared to how fast NumPy runs.

Q2: Can I use this in production?

Absolutely. Real-world teams rely on it for JVM reliability and Python’s ML strength. Test startup and deployment the same way you test other tools.

Q3: How is memory usage?

Both the JVM and Python consume resources. Pay attention to memory, especially if you’re working with huge datasets.

Q4: Is libpython-clj still maintained?

Indeed. The Clojure community ensures compatibility with the latest versions of Python and keeps it up to date.

Q1: What about the Python GIL?

Python code still runs under the Global Interpreter Lock, so calls into Python do not run in parallel with each other. Clojure handles concurrency separately on the JVM side, so the rest of the workload can still scale.

Q2: Does it support parallel workloads?

Yes. Clojure gives you concurrency tools like atoms, refs, agents, and futures, all running on the JVM, which is built for scale. Python handles numerical processing.

Q1: Does it support GPU acceleration?

While Python libraries such as TensorFlow, PyTorch, and CuPy support GPU acceleration, libpython-clj itself is only an interop layer and neither enables nor restricts GPU usage.

Q2: Is it compatible with virtual environments?

Yes. Set libpython-clj to your Python virtual environment to keep dependencies simple.

Q3: Can I mix and match multiple Python libraries?

Yes. Import and use any Python library you want, just like you would in Python. Clojure ties everything together.

Q1: How difficult is debugging?

Quite simple. Errors show up directly in Clojure, and the REPL makes it easy to try code in small steps.

Q2: Does the REPL help collaboration?

Definitely. The REPL makes quick tests easy, and results are simple to share.

Hybrid ML pipelines are gaining popularity quickly. Teams want the dependable stability from the JVM, but they are not willing to give up Python’s powerhouse ML libraries. So, rather than choosing one, an increasing number of projects use both. Combining them makes it way easier to scale up, keep things running smoothly, and adjust quickly as the workload changes.

Interop tools like libpython-clj are no longer just for experimentation. These days, libpython-clj is the go-to for integrating Clojure and Python in real production code. Developers can import NumPy, SciPy, or scikit-learn right from Clojure, with no awkward workarounds. As more teams join, tools like this are becoming the backbone of hybrid pipelines.

Zero-copy integration is already a game-changer. Eliminating data duplication saves time and memory. Looking ahead, there is room to further improve it. Think faster pipelines, better support for huge datasets, smoother GPU acceleration, and handling complicated data structures without the usual headaches. All this will further reduce overhead and make everything feel almost effortless.

At Flexiana, we have seen hybrid ML pipelines move out of the “experimental” corner and take center stage for big companies. Here is where things are headed by 2026 and beyond:

The direction is clear: Hybrid ML pipelines are not a passing trend. They are the new normal, enabling developers to leverage the best tools from both worlds and get things done.

Clojure machine learning brings the rock-solid reliability of the JVM, while NumPy offers the raw speed Python is known for when processing numerical data. Combine them, and developers get a hybrid ML pipeline that does not force them to choose between stability and performance. With tools like libpython-clj, moving data between the two just works. Teams can access enterprise-level concurrency and fast numerical work at the same time, with no compromises, especially if they are pushing the limits of numerical computing in Clojure.

Bringing these strengths together enables teams to move faster in AI software development, test new ideas without getting stuck, and keep their codebase clean and scalable as their needs grow. It is a practical setup- flexible, efficient, and ready to handle whatever real-world demands come their way.

If you are ready to kick off your own hybrid ML pipeline, Flexiana can help you blend JVM reliability with NumPy speed. Let’s get started.

The post Clojure + NumPy Interop: The 2026 Guide to Hybrid Machine Learning Pipelines appeared first on Flexiana.

bin/launchpad

Close to four years ago we released Launchpad, a tool which has been indispensible in our daily dev workflow ever since. In the latest Clojure Survey, over 85% of respondents indicated "The REPL / Interactive Development" is important for them. We already explained at length in our Why Clojure what exactly we mean when we say "Interactive Development", and why it is so important.

In order to do Interactive Development, you need a live process to interact with, connected to your editor or IDE. This is where Launchpad comes in. It focuses on doing one thing well: starting that Clojure process for you to interact with, with all the bits and bobs that make for a pleasant and productive environment. It&aposs a simple idea, and we&aposve heard from numerous people how it has made their life easier. But not everyone seems to get it.

Ovi Stoica has been doing great work on ShipClojure, and as part of that work he created leinpad, a Launchpad for Leiningen users. It's been very validating to see people pick up these same ideas and run with them. Launchpad is for Clojure CLI only; we switched away from Leiningen as soon as the official CLI came out in 2018 and never looked back. Leiningen vs Clojure CLI is perhaps a topic we can dig into in another newsletter, but needless to say it's good to see a Launchpad alternative for people still using Leiningen, by need or by choice.

The Leinpad release announcement sparked some interesting discussion on Clojurians Slack, going back to some of the Launchpad design decisions. Launchpad strongly recommends that people create a bin/launchpad script, just like we also recommend that people create a bin/kaocha script to invoke the Kaocha test runner. In both cases there are two related reasons why we feel strongly about this. We want both of these to become universal conventions, so that when you start working on a Clojure project for the first time, you can safely assume that bin/kaocha runs your tests, and bin/launchpad starts your development process. In any project you can put something in that location that does that job, regardless of your stack and setup. It's a form of late binding, and it means a new hire doesn't need to pore over the README, or worse, ask around, to know how they're supposed to run the tests or run a dev environment.

Each time people instead decide they prefer bb run launchpad, or clj -X:kaocha, or any other variant, they break the predictive power of that convention. They muddy the water for everyone. This is why we resisted a -X style entrypoint for Kaocha, despite later accepting a community contribution that implements one. A convention is only as powerful as its adoption rate.

Besides being a convention, it's also a place where you can customize the Launchpad (or Kaocha) behavior. For Launchpad this is especially important because the goal for the bin/launchpad script is to be a one-stop-shop for your entire dev environment. That can mean installing npm packages, running docker containers, loading stub data, anything you need so that a new contributor can arrive, run bin/launchpad, and be productive.

Recently a team member had trouble running Launchpad because their babashka version was out of date. They reached for the tool they usually use when confronted with "works on my machine" issues: Nix. Nix ensures a reproducible environment, where everyone is using the exact same versions and packages. It's like tools.deps but for system software, and solves some of the issues around Phantom Dependencies.

This meant replacing the bb shebang with nix-shell. This shows the power of a filesystem convention like bin/launchpad. The facade stays the same, but what's under the covers is now radically different.

-#!/usr/bin/env bb
+#!/usr/bin/env nix-shell
+#! nix-shell -i bb -p babashka
+#! nix-shell -I nixpkgs=https://github.com/NixOS/nixpkgs/archive/refs/tags/25.11.tar.gz

Later on I introduced GStreamer to the project, to support multimedia playback. This too is (partially) a system dependency, and some people on the team struggled to get it working. In this case I was able to build on the nix-shell approach, adding the additional dependencies. So instead of adding a section to the README that explains how to either brew or apt install gstreamer, I added the necessary bits to our one-stop-shop. And all the while it's still just bin/launchpad. I'd love to see a cultural shift where we no longer accept ten steps of outdated instructions in a README just to get a dev environment. Dev tooling is part of our job, and we do ourselves, our team members, and our employers an injustice by treating it with any less care than we give to the customer facing bits of our software.

At Gaiwan we share interesting reads and resources in our #tea-break channel.

EU Tech Map and European Alternatives: We care about the EU's software rebellion: moving away from American companies, doing things in-house, supporting OSS, etc. It also sets a benchmark and a path for other countries to follow.

The job losses are real — but the AI excuse is fake by David Gerard. Layoffs are done for economic reasons, but blamed on AI because that sounds better, and meanwhile the whole situation is used to get people to accept lower job offers. What's your experience with this?

An AI Agent Published a Hit Piece on Me by Scott Shambaugh. In case you haven't seen it already, this is 🤯

One or more of us is considering this really cool open source Linux device, by an Indian team. And not as expensive as you might have thought. (We are not compensated for writing about this Kickstarter project; we really just think it's cool.) So many devices, so little time...

Datastar and Clojure, the ethics of generative AI, and working with GitLab encrypted OpenTofu state.

This time we have plenty of material: from a fascinating interview with Kotlin’s creator, through a groundbreaking change in Minecraft, to a whole wave of new projects proving that Java UI is in much better shape than anyone would expect. Let’s dive in!

Gergely Orosz on his Pragmatic Engineer podcast conducted an extensive interview with Andrey Breslav, the creator of Kotlin and founder of CodeSpeak. The conversation is a goldmine of little-known facts about Kotlin’s history - for example, that the first version of Kotlin wasn’t a compiler but an IDE plugin, that the initial team consisted mainly of fresh graduates, or that smart casts were inspired by the obscure language Gosu.

But the most interesting thread concerns the future. Breslav is now building CodeSpeak - a new programming language designed to reduce boilerplate by replacing trivial code with concise natural language descriptions. The motivation? Keeping humans in control of the software development cycle in the age of LLM agents. As he put it - in the future, engineers will still be building complex systems, and it’s worth remembering that, even if Twitter is trying to convince us otherwise (heh).

Moderne announced that their OpenRewrite platform - known primarily in the Java ecosystem as a tool for automatic code refactoring - now officially supports Python. Python code can now be parsed, analyzed, and transformed alongside Java and JavaScript within the same Lossless Semantic Tree (LST).

Why does this matter for us JVM folks? Because modern systems rarely evolve in isolation. A Java service might expose an API consumed by a Python integration, a shared dependency might appear in backend services, frontend tooling, and automation scripts. With Python in the LST, dependency upgrades can be coordinated in a single campaign across multiple languages.

An interesting extension to the mission of one of the JVM ecosystem’s most important tools.

Igor Souza wrote a fun article celebrating the 40th anniversary of Legend of Zelda, comparing the triangle of performance improvements in Java 25 to the legendary Triforce. The analogy connects three key improvements: optimized class caching (AOT caching) for faster startup, Compact Object Headers for more efficient memory use, and garbage collection improvements. Java “Power, Wisdom, Courage” - genuinely a lovely connection with gaming lore.

Continuing with video game topics, there is big news from the gamedev side of the JVM - Mojang announced that Minecraft Java Edition is switching from OpenGL to Vulkan as part of the upcoming “Vibrant Visuals” update. This is a massive change for one of the most popular games written in Java. The goal is both visual improvements and better performance. Mojang confirmed that the game will still support macOS and Linux (on macOS through a translation layer, since Apple doesn’t natively support Vulkan).

Interestingly, this is probably the first Java game to use Vulkan. Modders should start preparing for the migration - moving away from OpenGL will require more effort than a typical update. Snapshots with Vulkan alongside OpenGL are expected “sometime in summer,” with the ability to switch between them until things stabilize.

PS: I started to introduce my daughter to Minecraft ❤️ Fun time.

JetBrains announced that starting from version 2026.1 EAP, IntelliJ-based IDEs will run natively on Wayland by default. This is a significant step, particularly since Wayland has become the default display server in most modern Linux distributions.

One small difference in practice - the startup splash screen won’t be displayed, because it can’t be reliably centered on Wayland.

Positioning things is one of the hardest things in IT.

Bruno Borges published a practical guide on configuring JDK 25 for GitHub Copilot Coding Agent - the ephemeral GitHub Actions environment where the agent builds code and runs tests. By default, the agent uses the pre-installed Java version on the runner, which can lead to failed builds if the project requires newer features. The solution? A dedicated copilot-setup-steps workflow with actions/setup-java.

Short, concrete, and useful if you’re starting to experiment with coding agents in Java projects.

SkillsJars is a new project showcased on the Coffee + Software livestream (Josh Long, DaShaun Carter, James Ward) - a registry and distribution platform for Agent Skills via... Maven Central.

The idea is simple and simultaneously crazy in the best possible way: Agent Skills (the SKILL.md format introduced by Anthropic for Claude Code, also adopted by OpenAI Codex) are modular packages of instructions, scripts, and resources that extend AI agent capabilities - e.g., a skill for creating .docx documents, debugging via JDB, or building MCP servers. SkillsJars packages these skills as JAR artifacts and publishes them on Maven Central under the com.skillsjars group, with full support for Maven, Gradle, and sbt.

The catalog already includes a Spring Boot 4.x skill (best practices, project structure, configuration), an agentic JDB debugger by Bruno Borges, a browser automation skill (browser-use), and official Anthropic skills for creating presentations, PDFs, frontend design, and building MCP servers. Anyone can publish their own skill - just point it to a GitHub repo.

Maven Central as a package manager for agentic AI - we truly live in interesting times.

Johannes Bechberger from the SapMachine team at SAP created a fun quiz where you have to guess the minimum Java version required from a code snippet. Over 30 years, Java added generics, lambdas, pattern matching, records... and it turns out most of us can’t precisely recall which feature arrived in which version.

A perfect way to kill five minutes over coffee (or an hour, if you’re as nerdy as I am).

If you think it’s too easy... I dare you to try the Java Alpha version, with alpha features 😉
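To give a flavor of what the quiz asks, here is a hypothetical snippet (written for this note, not taken from the quiz; the class and method names are made up). Each feature is annotated with the release in which it became a final, non-preview feature. The highest number wins, so this one should need at least Java 17.

```java
import java.util.List;

public class MinVersionQuiz {
    // Sealed types became final in Java 17.
    sealed interface Shape permits Circle, Square {}

    // Records became final in Java 16.
    record Circle(double radius) implements Shape {}
    record Square(double side) implements Shape {}

    static double area(Shape shape) {
        // Pattern matching for instanceof became final in Java 16.
        if (shape instanceof Circle c) {
            return Math.PI * c.radius() * c.radius();
        }
        // Local-variable type inference (var) arrived in Java 10.
        var square = (Square) shape;
        return square.side() * square.side();
    }

    static String label(Shape shape) {
        // Switch expressions became final in Java 14.
        return switch (shape.getClass().getSimpleName()) {
            case "Circle" -> "round";
            default -> "angular";
        };
    }

    public static void main(String[] args) {
        // List.of arrived in Java 9.
        var shapes = List.of(new Circle(1.0), new Square(2.0));
        for (Shape s : shapes) {
            System.out.println(label(s) + " area=" + area(s));
        }
    }
}
```

Remove the sealed interface and the answer drops to 16; that kind of off-by-one is where the quiz gets tricky.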

Akamas published an analysis of the state of Java on Kubernetes based on thousands of JVMs in production.

The findings? Despite it being 2026, most Java workloads on K8s run with default settings that actively hurt performance. 60% of JVMs have no Garbage Collector configured, most heap settings are at defaults, and a significant portion of pods run with less than 1 CPU or less than 1 GiB RAM - which is a serious bottleneck for Java’s multi-threaded architecture. An old problem, but the data is striking.

PS: Ergonomic Profiles was always a great idea, IMHO.
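A quick way to see what a JVM actually ended up with inside a pod is to ask it. The sketch below (the class name JvmErgonomicsReport is made up for illustration) uses only standard Runtime and java.lang.management calls to print the CPUs the JVM sees, the maximum heap, and the active collectors. One assumption worth stating: when no collector is configured, HotSpot picks one based on the resources it sees, and on very small containers that typically means the Serial collector, which usually shows up here under the names "Copy" and "MarkSweepCompact".

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class JvmErgonomicsReport {
    // Names of the collectors the running JVM registered,
    // e.g. "G1 Young Generation" and "G1 Old Generation" for G1.
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    // Number of processors the JVM believes it can use.
    static int visibleCpus() {
        return Runtime.getRuntime().availableProcessors();
    }

    // Maximum heap the JVM will attempt to use, in MiB.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    public static void main(String[] args) {
        System.out.println("CPUs visible to the JVM: " + visibleCpus());
        System.out.println("Max heap (MiB): " + maxHeapMiB());
        System.out.println("Collectors in use: " + collectorNames());
    }
}
```

Running this once in the same container image and resource limits as production is a cheap sanity check before reaching for explicit GC and heap flags.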

JetBrains released an extension for Visual Studio Code that enables converting Java files to Kotlin. The converter (J2K) uses the same engine as IntelliJ IDEA. Just open a .java file, right-click, and select “Convert to Kotlin.”

An interesting move - JetBrains clearly wants the Kotlin ecosystem to expand beyond their own IDE.

Robin Tegg, whose piece on Java UI landed in the newsletter two weeks ago, created an awesome page - a comprehensive guide to Java UI frameworks, from desktop (JavaFX, Swing, Compose Desktop) through web (Vaadin, HTMX, Thymeleaf) to terminal (TamboUI, JLine, Lanterna). The motivation? Frustration with outdated articles referencing dead libraries. The result is the best single source of knowledge on the current state of Java UI in 2026.

If anyone tells you “you can’t do UI in Java” - send them this link.

Scala Survey 2026 - VirtusLab and the Scala Center have launched their annual community survey, and if you’re using Scala in any capacity, your 5 minutes can directly shape the language’s roadmap, library ecosystem, and tooling priorities. The survey evaluates Scala adoption patterns, pain points, and what the community actually needs - and the results have historically influenced real decisions about where development effort goes.

Take the survey here. Whether you’re a daily Scala developer or someone who occasionally dips into the ecosystem, your perspective matters. It’s a unique opportunity to shape the language.

To wrap this section - 100 most-watched presentations from Java conferences in 2025 is a solid list to catch up on. And the article on 10 modern Java features that let you write 50% less code is a good refresher, especially for those who are mentally stuck on Java 8.