OSS updates May and June 2025

In this post I'll give updates about open source I worked on during May and June 2025.

To see previous OSS updates, go here.

Sponsors

I'd like to thank all the sponsors and contributors that make this work possible. Without you, the projects below would not be as mature, or wouldn't exist or be maintained at all! So a sincere thank you to everyone who contributes to the sustainability of these projects.

Current top tier sponsors:


If you want to ensure that the projects I work on are sustainably maintained, you can sponsor this work in the following ways. Thank you!

- GitHub Sponsors

- The Babashka or Clj-kondo OpenCollective

- Ko-fi

- Patreon

- Clojurists Together

Updates

Here are updates about the projects/libraries I've worked on in the last two months, 19 in total!

babashka: native, fast starting Clojure interpreter for scripting.

- Bump edamame (support old-style `#^` metadata)
- Bump SCI: fix `satisfies?` for protocol extended to `nil`
- Bump rewrite-clj to `1.2.50`
- 1.12.204 (2025-06-24)
  - Compatibility with clerk's main branch
  - #1834: make `taoensso/trove` work in bb by exposing another `timbre` var
  - Bump `timbre` to `6.7.1`
  - Protocol method should have `:protocol` meta
  - Add `print-simple`
  - Make bash install script work on Windows for GHA
  - Upgrade Jsoup to `1.21.1`
- 1.12.203 (2025-06-18)
  - Support `with-redefs` + `intern` (see SCI issue #973)
  - #1832: support `clojure.lang.Var/intern`
  - Re-allow `init` as task name
- 1.12.202 (2025-06-15)
  - Support `clojure.lang.Var/{get,clone,reset}ThreadBindingFrame` for JVM Clojure compatibility
  - #1741: fix `taoensso.timbre/spy` and include test
  - Add `taoensso.timbre/set-ns-min-level!` and `taoensso.timbre/set-ns-min-level`
- 1.12.201 (2025-06-12)
  - #1825: Add Nextjournal Markdown as built-in Markdown library
  - Promesa compatibility (pending PR here)
  - Upgrade clojure to `1.12.1`
  - #1818: wrong argument order in `clojure.java.io/resource` implementation
  - Add `java.text.BreakIterator`
  - Add classes for compatibility with promesa:
    - `java.lang.Thread$Builder$OfPlatform`
    - `java.util.concurrent.ForkJoinPool`
    - `java.util.concurrent.ForkJoinPool$ForkJoinWorkerThreadFactory`
    - `java.util.concurrent.ForkJoinWorkerThread`
    - `java.util.concurrent.SynchronousQueue`
  - Add `taoensso.timbre/set-min-level!`
  - Add `taoensso.timbre/set-config!`
  - Bump `fs` to `0.5.26`
  - Bump `jsoup` to `1.20.1`
  - Bump `edamame` to `1.4.30`
  - Bump `taoensso.timbre` to `6.7.0`
  - Bump `pods`: more graceful error handling when pod quits unexpectedly
  - #1815: Make install-script wget-compatible (@eval)
  - #1822: `type` should prioritize `:type` metadata
  - `ns-name` should work on symbols
  - `:clojure.core/eval-file` should affect `*file*` during eval
  - #1179: run `:init` in tasks only once
  - #1823: run `:init` in tasks before task specific requires
  - Fix `resolve` when `*ns*` is bound to symbol
  - Bump `deps.clj` to `1.12.1.1550`
  - Bump `http-client` to `0.4.23`
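Two of the babashka changes above bring it in line with how JVM Clojure already behaves: `type` prefers `:type` metadata over the value's class, and `ns-name` accepts a symbol as well as a namespace object. The small script below is plain Clojure, so it should behave the same under `bb` and `clojure`; the keyword `::point` and the var `tagged` are made-up names for illustration.

```clojure
;; Hypothetical example data: a vector tagged via :type metadata.
(def tagged (with-meta [1 2] {:type ::point}))

;; `type` returns the :type metadata when present, else the class.
(println (type tagged)) ; the ::point keyword, qualified with the current ns
(println (type [1 2]))  ; the vector's class

;; `ns-name` goes through `the-ns`, so a symbol naming a loaded
;; namespace works as well as the namespace object itself.
(println (ns-name 'clojure.core))
(println (ns-name (find-ns 'clojure.core)))
```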


SCI: Configurable Clojure/Script interpreter suitable for scripting

- 0.10.47 (2025-06-27)
  - Security issue: function recursion can be forced by returning internal keyword as return value
  - Fix #975: Protocol method should have `:protocol` var on metadata
  - Fix #971: fix `satisfies?` for protocol that is extended to `nil`
  - Fix #977: Can't analyze `sci.impl.analyzer` with splint
- 0.10.46 (2025-06-18)
  - Fix #957: `sci.async/eval-string+` should return promise with `:val nil` for ns form rather than `:val <Promise>`
  - Fix #959: Java interop improvement: instance method invocation now leverages type hints
  - Bump edamame to `1.4.30`
  - Give metadata `:type` key priority in `type` implementation
  - Fix #967: `ns-name` should work on symbols
  - Fix #969: `^:clojure.core/eval-file` metadata should affect binding of `*file*` during evaluation
  - Sync `sci.impl.Reflector` with changes in `clojure.lang.Reflector` in clojure 1.12.1
  - Fix `:static-methods` option for class with different name in host
  - Fix #973: support `with-redefs` on core vars, e.g. `intern`. The fix for this issue entailed quite a big refactor of internals which removes "magic" injection of ctx in core vars that need it.
  - Add `unchecked-set` and `unchecked-get` for CLJS compatibility
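Fixes #971 and #973 are about matching JVM Clojure semantics inside SCI. The plain-Clojure sketch below shows the expected behavior: `satisfies?` returning true for a protocol extended to `nil`, and `with-redefs` temporarily replacing the core var `intern`. The protocol `Describe`, the var `nil-satisfies?` and the atom `interned` are hypothetical names; to try the same source in SCI you could pass it as a string to `sci.core/eval-string`.

```clojure
;; Hypothetical protocol, extended to nil.
(defprotocol Describe
  (describe [x]))

(extend-protocol Describe
  nil
  (describe [_] "nothing"))

;; satisfies? should report true for nil once the protocol is extended to it.
(def nil-satisfies? (satisfies? Describe nil))

;; Temporarily replace the core var `intern`. Calls inside the body go
;; through the var, so they hit the stub, which records the symbol
;; instead of creating a var.
(def interned (atom []))

(with-redefs [intern (fn [_ns sym & _] (swap! interned conj sym))]
  (intern *ns* 'example-var 42))

(println nil-satisfies? (describe nil) @interned)
```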

clerk: Moldable Live Programming for Clojure

- Make clerk compatible with babashka

quickblog: light-weight static blog engine for Clojure and babashka

- 0.4.7 (2025-06-12)
  - Switch to Nextjournal Markdown for markdown rendering. The minimum babashka version to be used with quickblog is now v1.12.201 since it comes with Nextjournal Markdown built-in.
  - Link to previous and next posts; see "Linking to previous and next posts" in the README (@jmglov)
  - Fix flaky caching tests (@jmglov)
  - Fix argument passing in test runner (@jmglov)
  - Add `--date` to `api/new`. (@jmglov)
  - Support Selmer template for new posts in `api/new`; see Templates > New posts in README. (@jmglov)
  - Add `'language-xxx'` to pre/code blocks
  - Fix README.md with working version in quickstart example
  - Fix #104: fix caching with respect to previews
  - Fix #104: document `:preview` option

edamame: configurable EDN and Clojure parser with location metadata and more

- 1.4.31 (2025-06-25)
  - Fix #124: add `:imports` to `parse-ns-form`
  - Fix #125: Support `#^:foo` deprecated metadata reader macro (@NoahTheDuke)
  - Fix #127: expose `continue` value that indicates continue-ing parsing (@NoahTheDuke)
  - Fix #122: let `:auto-resolve-ns` affect syntax-quote
- 1.4.30
  - #120: fix `:auto-resolve-ns` failing case
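Edamame 1.4.31 accepts the deprecated `#^` metadata syntax (#125), which Clojure's own reader still supports. A minimal sketch, assuming edamame 1.4.31 or later is on the classpath (for example via a `borkdude/edamame` dependency); the input string and the var `parsed` are made up for illustration.

```clojure
(require '[edamame.core :as e])

;; Old-style #^ metadata on a vector literal.
(def parsed (e/parse-string "#^:foo [1 2 3]"))

;; The value is the vector; :foo should end up in its metadata,
;; alongside the location metadata edamame adds.
(println parsed (meta parsed))
```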

squint: CLJS syntax to JS compiler

- #678: Implement `random-uuid` (@rafaeldelboni)
- v0.8.149 (2025-06-19)
  - #671: Implement `trampoline` (@rafaeldelboni)
  - Fix #673: remove experimental atom as promise option since it causes unexpected behavior
  - Fix #672: alias may contain dots
- v0.8.148 (2025-05-25)
  - Fix #669: munge refer-ed + renamed var
- v0.8.147 (2025-05-09)
  - Fix #661: support `throw` in expression position
  - Fix #662: Fix extending protocol from other namespace to `nil`
  - Better solution for multiple expressions in return context in combination with pragmas
  - Add an ajv example
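`trampoline` (#671) lets mutually recursive functions run without growing the stack: each step returns a zero-argument function instead of calling the next function directly, and `trampoline` keeps calling those until it gets a non-function value. The sketch below is ordinary Clojure(Script) of the kind squint should now compile; `my-even?` and `my-odd?` are hypothetical names, and I haven't shown squint's generated JavaScript.

```clojure
(declare my-odd?)

;; Each function returns a thunk instead of recursing directly.
(defn my-even? [n]
  (if (zero? n) true #(my-odd? (dec n))))

(defn my-odd? [n]
  (if (zero? n) false #(my-even? (dec n))))

;; 100000 alternating steps, run in constant stack depth.
(println (trampoline my-even? 100000))
```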


clj-kondo: static analyzer and linter for Clojure code that sparks joy.

- #2560: NEW linter: `:locking-suspicious-lock`: report when `locking` is used on a single arg, interned value or local object
- #2555: false positive with `clojure.string/replace` and `partial` as replacement fn
- 2025.06.05
  - #2541: NEW linter: `:discouraged-java-method`. See docs
  - #2522: support `:config-in-ns` on `:missing-protocol-method`
  - #2524: support `:redundant-ignore` on `:missing-protocol-method`
  - #2536: false positive with `format` and whitespace flag after percent
  - #2535: false positive `:missing-protocol-method` when using alias in method
  - #2534: make `:redundant-ignore` aware of `.cljc`
  - #2527: add test for using ns-group + config-in-ns for `:missing-protocol-method` linter
  - #2218: use `ReentrantLock` to coordinate writes to cache directory within same process
  - #2533: report inline def under fn and defmethod
  - #2521: support `:langs` option in `:discouraged-var` to narrow to specific language
  - #2529: add `:ns` to `&env` in `:macroexpand-hook` macros when executing in CLJS
  - #2547: make redundant-fn-wrapper report only for all cljc branches
  - #2531: add `:name` data to `:unresolved-namespace` finding for clojure-lsp
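The new `:locking-suspicious-lock` linter (#2560) targets `locking` on values that make poor monitors. Two of the listed cases, sketched below as runnable Clojure, are an interned value (any unrelated code locking on the same keyword contends for the same monitor) and a locally created object (no other thread ever sees it, so it excludes nothing), next to the usual alternative of one dedicated lock object. The names `items`, `items-lock` and the `add-with-*` functions are hypothetical, and whether clj-kondo reports each particular form is up to the linter; I haven't reproduced its output here.

```clojure
;; A non-thread-safe collection that needs external synchronization.
(def items (java.util.ArrayList.))

;; Suspicious: keywords are interned, so any other code that happens to
;; lock on :items-lock contends for this same monitor.
(defn add-with-keyword-lock [x]
  (locking :items-lock
    (.add items x)))

;; Suspicious: a freshly created local object is never visible to other
;; threads, so this lock provides no mutual exclusion at all.
(defn add-with-local-lock [x]
  (let [lock (Object.)]
    (locking lock
      (.add items x))))

;; Usual alternative: one dedicated lock object shared by all callers.
(def ^:private items-lock (Object.))

(defn add-with-dedicated-lock [x]
  (locking items-lock
    (.add items x)))

(add-with-keyword-lock :a)
(add-with-local-lock :b)
(add-with-dedicated-lock :c)
(println (vec items))
```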


sci.configs: A collection of ready to be used SCI configs.

- A configuration for replicant was added

scittle: Execute Clojure(Script) directly from browser script tags via SCI

nbb: Scripting in Clojure on Node.js using SCI

- 1.3.204 (2025-05-15)
  - #389: fix regression caused by #387
- 1.3.203 (2025-05-13)
  - #387: bump `import-meta-resolve` to fix deprecation warnings on Node 22+
- 1.3.202 (2025-05-12)
  - Fix nbb nrepl server for Deno
- 1.3.201 (2025-05-08)
  - Deno improvements for loading `jsr:` and `npm:` deps, including react in combination with reagent
  - #382: prefix all node imports with `node:`

quickdoc: Quick and minimal API doc generation for Clojure

- v0.2.5 (2025-05-01)
  - Fix #32: fix anchor links to take into account var names that differ only by case
- v0.2.4 (2025-05-01)
  - Revert source link in var title and move back to `<sub>`
  - Specify clojure 1.11 as the minimal Clojure version in `deps.edn`
  - Fix macro information
  - Fix #39: fix link when var is named multiple times in docstring
  - Upgrade clj-kondo to `2025.04.07`
  - Add explicit `org.babashka/cli` dependency

Nextjournal Markdown: A cross-platform Clojure library for Markdown parsing and transformation

- 0.7.186
  - Make library more GraalVM `native-image` friendly
- 0.7.184
  - Consolidate utils in `nextjournal.markdown.utils`
- 0.7.181
  - Hiccup JVM compatibility for fragments (see #34)
  - Support HTML blocks (`:html-block`) and inline HTML (`:html-inline`) (see #7)
  - Bump commonmark to 0.24.0
  - Bump markdown-it to 14.1.0
  - Render `:code` according to spec into `<pre>` and `<code>` block with language class (see #39)
  - No longer depend on `applied-science/js-interop`
  - Accept parsed result in `->hiccup` function
  - Expose `nextjournal.markdown.transform` through main `nextjournal.markdown` namespace
  - Stabilize API and no longer mark library alpha
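Since babashka 1.12.201 ships Nextjournal Markdown built in, a bb script can parse and render Markdown without extra dependencies (on the JVM you'd add the library as a dependency). A minimal sketch using `nextjournal.markdown/parse` and `->hiccup`, relying on the changelog item that `->hiccup` now also accepts an already-parsed document; the input text and the vars `doc` and `hiccup` are made up, and I don't spell out the exact Hiccup produced.

```clojure
(require '[nextjournal.markdown :as md])

;; Parse once into the library's document structure (a map).
(def doc (md/parse "# Hello\n\nSome *emphasis* and `code`."))

;; Render the parsed document to Hiccup (nested vectors).
(def hiccup (md/->hiccup doc))

(prn hiccup)
```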

- Add `:responses` key with raw responses


- Add spec for `even?`


http-client: babashka's http-client

- 0.4.23 (2025-06-06)
  - #75: override existing content type header in multipart request
  - Accept `:request-method` in addition to `:request` to align more with other clients
  - Accept `:url` in addition to `:uri` to align more with other clients

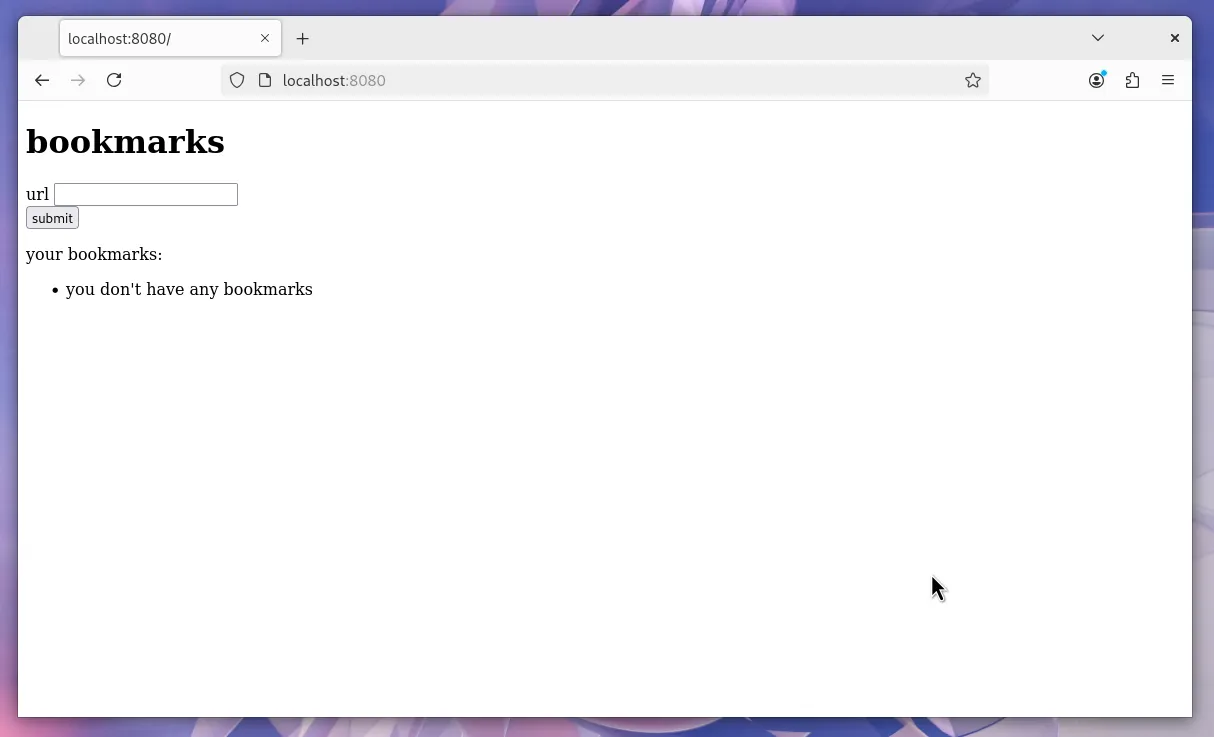

unused-deps: Find unused deps in a clojure project

- This is a brand new project!

fs - File system utility library for Clojure

cherry: Experimental ClojureScript to ES6 module compiler

- Fix `cherry.embed` which is used by malli


deps.clj: A faithful port of the clojure CLI bash script to Clojure

- Released several versions catching up with the clojure CLI

Other projects

These are (some of the) other projects I'm involved with, but where little to no activity happened in the past month.

- [tools](https://github.com/borkdude/tools): a set of [bbin](https://github.com/babashka/bbin/) installable scripts
- [sci.nrepl](https://github.com/babashka/sci.nrepl): nREPL server for SCI projects that run in the browser
- [babashka.json](https://github.com/babashka/json): babashka JSON library/adapter
- [squint-macros](https://github.com/squint-cljs/squint-macros): a couple of macros that stand-in for [applied-science/js-interop](https://github.com/applied-science/js-interop) and [promesa](https://github.com/funcool/promesa) to make CLJS projects compatible with squint and/or cherry.
- [grasp](https://github.com/borkdude/grasp): Grep Clojure code using clojure.spec regexes
- [lein-clj-kondo](https://github.com/clj-kondo/lein-clj-kondo): a leiningen plugin for clj-kondo
- [http-kit](https://github.com/http-kit/http-kit): Simple, high-performance event-driven HTTP client+server for Clojure.
- [babashka.nrepl](https://github.com/babashka/babashka.nrepl): The nREPL server from babashka as a library, so it can be used from other SCI-based CLIs
- [jet](https://github.com/borkdude/jet): CLI to transform between JSON, EDN, YAML and Transit using Clojure
- [pod-babashka-fswatcher](https://github.com/babashka/pod-babashka-fswatcher): babashka filewatcher pod
- [lein2deps](https://github.com/borkdude/lein2deps): leiningen to deps.edn converter
- [cljs-showcase](https://github.com/borkdude/cljs-showcase): Showcase CLJS libs using SCI
- [babashka.book](https://github.com/babashka/book): Babashka manual
- [pod-babashka-buddy](https://github.com/babashka/pod-babashka-buddy): A pod around buddy core (Cryptographic Api for Clojure).
- [gh-release-artifact](https://github.com/borkdude/gh-release-artifact): Upload artifacts to Github releases idempotently
- [carve](https://github.com/borkdude/carve): Remove unused Clojure vars
- [4ever-clojure](https://github.com/oxalorg/4ever-clojure): Pure CLJS version of 4clojure, meant to run forever!
- [pod-babashka-lanterna](https://github.com/babashka/pod-babashka-lanterna): Interact with clojure-lanterna from babashka
- [joyride](https://github.com/BetterThanTomorrow/joyride): VSCode CLJS scripting and REPL (via [SCI](https://github.com/babashka/sci))
- [clj2el](https://borkdude.github.io/clj2el/): transpile Clojure to elisp
- [deflet](https://github.com/borkdude/deflet): make let-expressions REPL-friendly!
- [deps.add-lib](https://github.com/borkdude/deps.add-lib): Clojure 1.12's add-lib feature for leiningen and/or other environments without a specific version of the clojure CLI